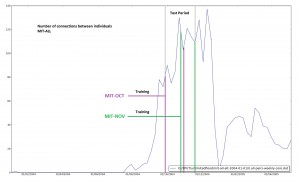

Had a meeting with Pádraig; we discussed using MOSES to get the list of communities of cell towers.

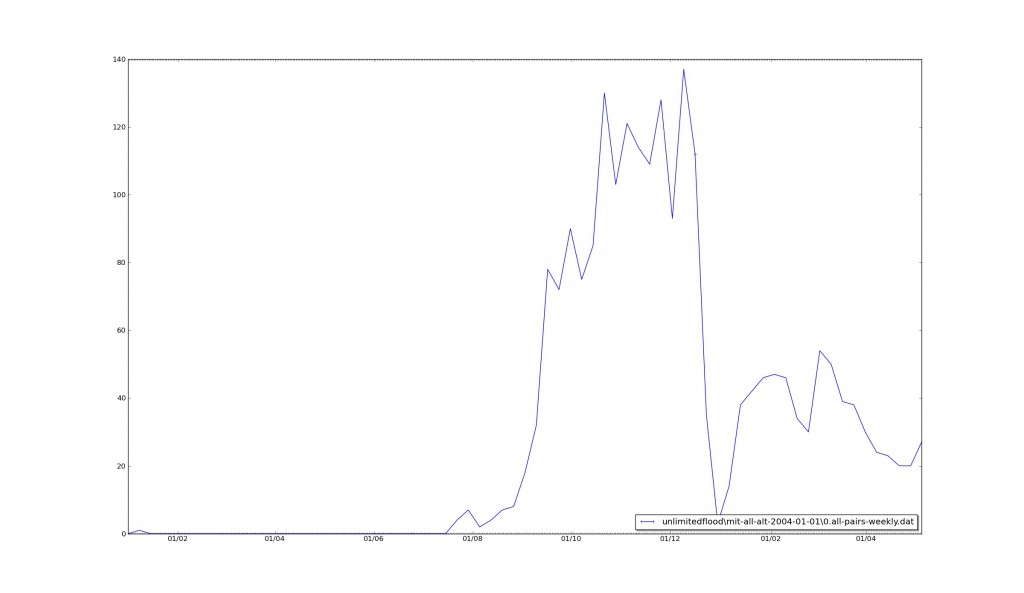

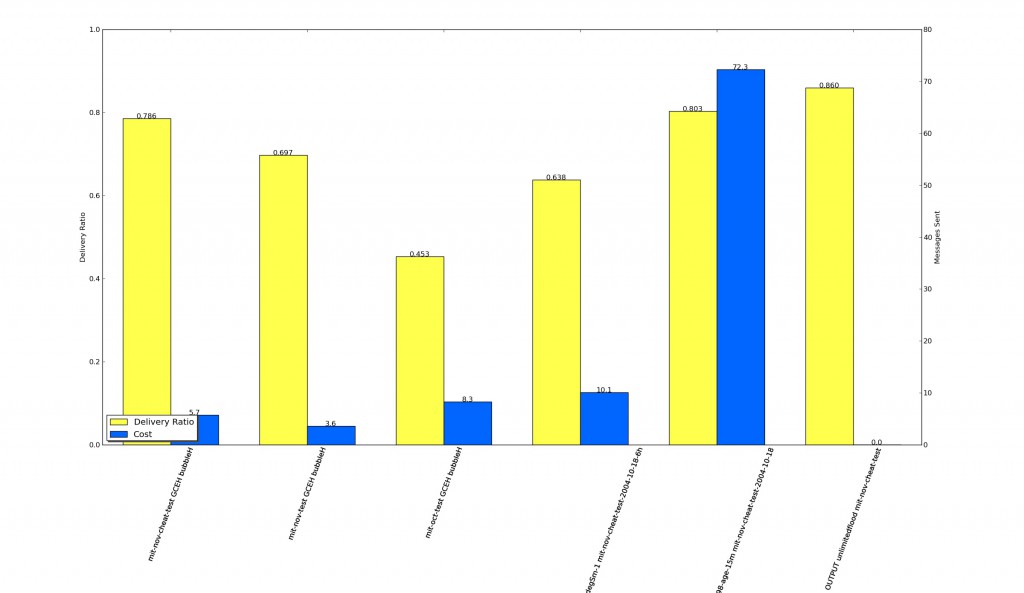

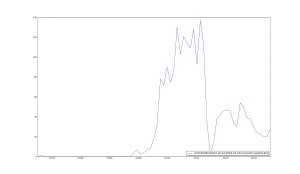

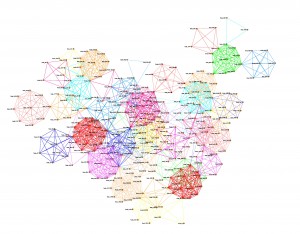

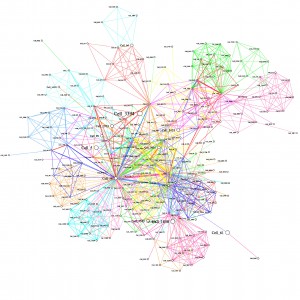

To recap, two cell towers are linked if, during a Bluetooth contact between two people, both towers are spotted by either person. This generates a huge furball when visualised. I installed MOSES on a server and ran it on the graph generated from MIT Oct 2004. It produced 66 communities. There are 468 nodes in the dataset, and the average number of communities per node is 2.17.
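The linking rule can be sketched roughly as follows. This is a minimal illustration rather than the script actually used: the function name `link_towers` and the input layout (one pair of tower sets per contact) are assumptions made for the example.

```python
from collections import Counter
from itertools import combinations


def link_towers(contacts):
    """Count how often each pair of towers is linked.

    `contacts` is a hypothetical input: one (towers_seen_by_a, towers_seen_by_b)
    pair of sets per Bluetooth contact.
    """
    edge_counts = Counter()
    for seen_a, seen_b in contacts:
        # Every tower spotted by either person during the contact is pooled.
        towers = sorted(set(seen_a) | set(seen_b))
        # Each unordered pair of pooled towers gets one more sighting.
        for u, v in combinations(towers, 2):
            edge_counts[(u, v)] += 1
    return edge_counts


contacts = [({"t1", "t2"}, {"t3"}), ({"t1"}, {"t2"})]
edge_counts = link_towers(contacts)
```

The counts per pair are kept because they can later serve as edge weights.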

Pádraig suggested visualising the data and colouring each community in turn, so that we can get an idea of which communities to remove because they are too big. This should leave us with smaller subsets of the graph that identify locations better.

We can then use these communities as the ‘locations’ in location based routing. We need to determine whether it matters if a node reports multiple ‘locations’ at the same time.

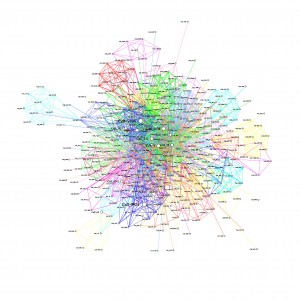

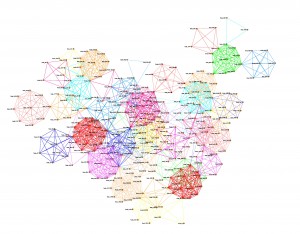

I started to view the communities coloured as suggested, but the graph still showed a very large furball, so I decided to see what happened when the highly connected nodes were removed. In the image below, we can see that when the 100 most highly connected nodes are removed, it becomes clear that there are distinct groups of cell towers.

MIT Oct 2004: when two users see each other, the cell towers they see are linked. This version has the 100 highest-degree nodes removed. Edges show community membership as given by MOSES.
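For reference, removing the highest-degree nodes can be sketched like this. It is a minimal sketch, assuming the graph is available as a plain list of undirected edges; the function name `remove_top_degree` is made up for the example, and ties are broken by node name only to keep the result deterministic.

```python
from collections import defaultdict


def remove_top_degree(edges, k):
    """Drop the k highest-degree nodes and every edge touching them."""
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    # Highest degree first; node name is an arbitrary tie-breaker.
    ranked = sorted(degree, key=lambda n: (-degree[n], n))
    removed = set(ranked[:k])
    kept = [(u, v) for u, v in edges if u not in removed and v not in removed]
    return kept, removed


edges = [("a", "b"), ("a", "c"), ("a", "d"), ("b", "c"), ("d", "e")]
kept, removed = remove_top_degree(edges, 1)
```

Here degree counts distinct neighbours, not the number of times an edge was sighted.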

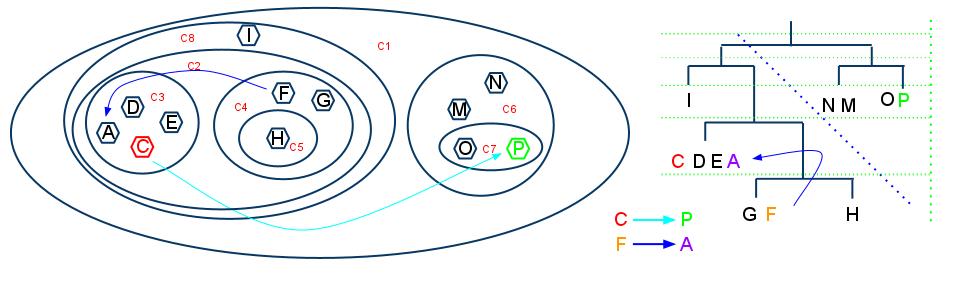

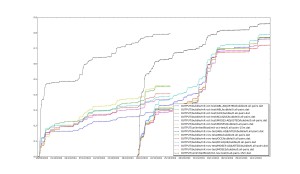

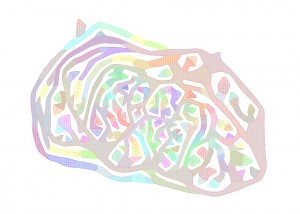

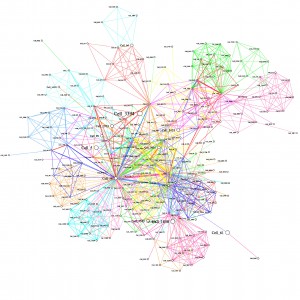

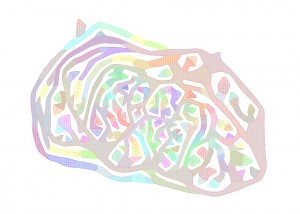

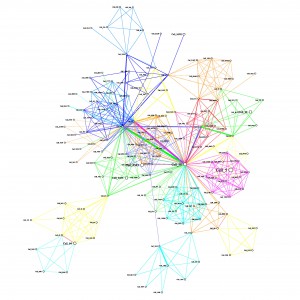

I sent the MOSES output and the edge list to Aaron McDaid and Daniel Archambault, who generated the Euler diagram below using Tulip.

The layout in the visualization is based solely on the communities found by MOSES. Tulip attempts to lay out the sets in an Euler diagram such that communities are placed nearby if they share nodes.

Euler Diagram generated using Tulip from the output of MOSES for cell towers connected in MIT Oct 2004.

I have yet to speak to Aaron in more detail about what this diagram means, but if I have interpreted the visualization correctly, the similarly coloured areas are collections of nearby nodes, separated into distinct clusters rather than overlapping ones. If it is possible to extract this clustering, it might provide exactly the location clustering we need, provided we remove the very large clusters (light brown/green).

I took some time to review the way that cell towers are linked, to make sure that the linking was fair before MOSES did its thing. As it stands, it is a little loose in how it determines whether two cells are linked, as it links all cells that are seen during an entire contact period. This means that cells are also linked when two people are travelling together. I plan to work on a stricter approach, where the durations of the cell sightings for each person are compared, and cells are linked only when it is clear that they were seen at the same time. However, I continued using the results we already have.
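One way the stricter rule might look is sketched below. This is only a sketch of the idea, not an implementation that exists yet: the function name `overlapping_links` and the `(tower, start, end)` sighting layout are assumptions, and clipping the sightings to the contact period is left out.

```python
def overlapping_links(sightings_a, sightings_b):
    """Link towers only when the two sightings overlap in time.

    Each sighting is a hypothetical (tower, start, end) tuple, with
    start and end given as comparable timestamps.
    """
    links = set()
    for tower_a, start_a, end_a in sightings_a:
        for tower_b, start_b, end_b in sightings_b:
            # Two intervals overlap when each starts before the other ends.
            overlaps = start_a < end_b and start_b < end_a
            if tower_a != tower_b and overlaps:
                links.add(tuple(sorted((tower_a, tower_b))))
    return links


person_a = [("t1", 0, 10), ("t2", 10, 20)]
person_b = [("t3", 5, 8), ("t4", 15, 25)]
links = overlapping_links(person_a, person_b)
```

With the loose rule all four towers in this example would be linked to each other; with the overlap test only `t1`–`t3` and `t2`–`t4` are.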

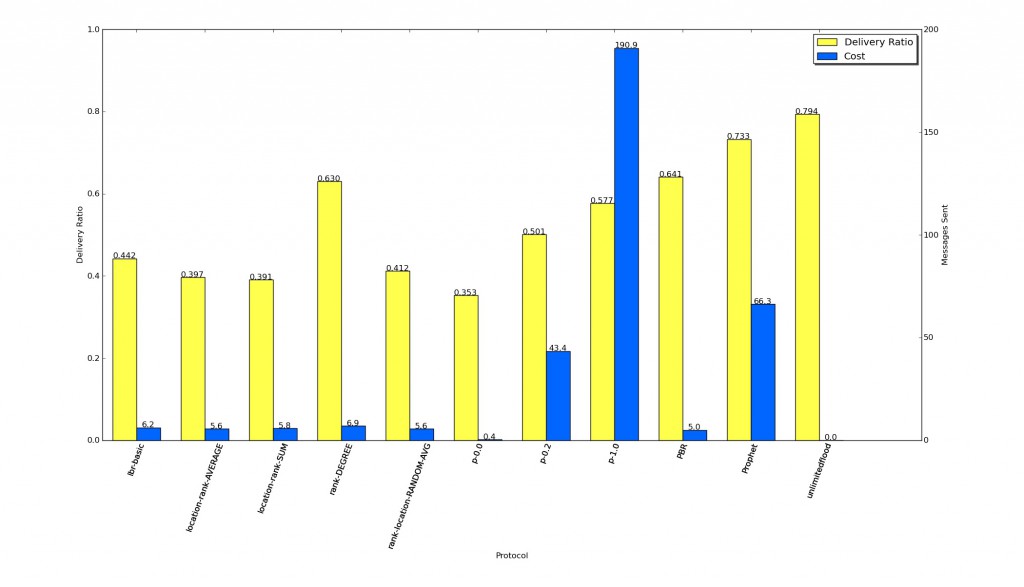

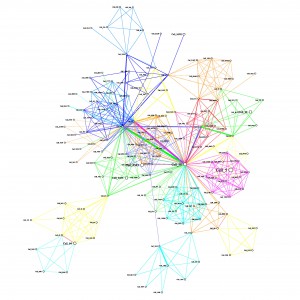

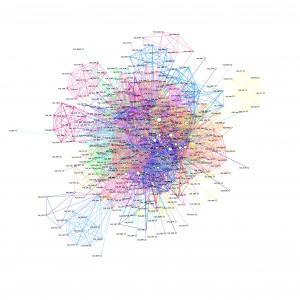

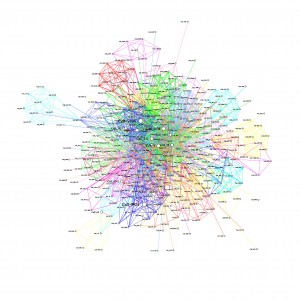

The images below show the graphs when the top N communities are removed. The size of a node relates to the number of times its cell tower is reported, using binning.

The maximum number of times a node is spotted is 9291 and the minimum is 1, so

(9291 - 1) / 10 bins = bin size of 929.

For example, if a node is spotted 1043 times, then it will be placed into bin 2.
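The binning arithmetic can be checked with a small sketch. The function name `bin_index` and the handling of boundary values (bins numbered from 1, the maximum clamped into the last bin) are assumptions; the original script may treat the edges of a bin differently.

```python
def bin_index(count, lowest, highest, bins=10):
    """Return a 1-based bin number for `count` using equal-width bins."""
    # Integer bin width, e.g. (9291 - 1) // 10 = 929; never smaller than 1.
    size = max(1, (highest - lowest) // bins)
    index = (count - lowest) // size + 1
    # The maximum value would otherwise spill into an extra bin.
    return min(index, bins)


size = (9291 - 1) // 10
example_bin = bin_index(1043, 1, 9291)
```

The same function applies to edge widths by passing the edge minimum and maximum instead.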

The width of an edge relates to the number of times that edge is reported between its two vertices, again using binning. The maximum number of times an edge is spotted is 3676 and the minimum is 1. The average, however, is only 8.38 and the median is only 3, so the binning really only distinguishes the very frequently seen edges.

The colour of an edge is related to its community membership. Because nodes can be members of multiple communities, and could therefore have multiple edges between them, I decided to create only one edge and distinguish it using the concatenation of the community names (e.g. Community_0Community4…), so that edges belonging to the same set of communities have the same colour. This might not have been the best way to do it, but the software used does not show multiple edges between the same nodes. An alternative would be to make the width of the edge equal to the number of communities it represents.
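A rough sketch of the labelling, including the alternative of using the number of communities as the width, is below. The function name `edge_label`, the community names, and the rule that an edge belongs to every community containing both of its endpoints are all assumptions made for the example.

```python
def edge_label(u, v, communities):
    """Return (label, count) for the single edge drawn between u and v.

    `communities` maps a community name to its set of member nodes.
    """
    # Assumption: an edge belongs to each community that holds both endpoints.
    shared = [
        name
        for name in sorted(communities)
        if u in communities[name] and v in communities[name]
    ]
    # The concatenated names act as the colour key; the count could be a width.
    return "".join(shared), len(shared)


communities = {
    "Community_0": {"a", "b", "c"},
    "Community_4": {"b", "c"},
    "Community_7": {"c", "d"},
}
label, count = edge_label("b", "c", communities)
```

Sorting the names before joining them keeps the label stable, so two edges in the same set of communities always get the same colour key.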

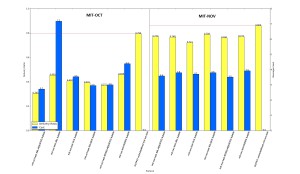

Minus 1 Community

Minus 15 Communities

Minus 30 Communities

Minus 40 Communities

As communities are removed, the graph becomes clearer. Once 40 communities are removed, the distinct communities and the high degree nodes are much more obvious.

eps and png files for other numbers removed

The goal is that, given any cell tower id, we can identify which community it belongs to and, by extension, the ‘location’ the node is at.

One idea I have for finally making this data usable, so that we have distinct communities, is as follows. Ignore the top N communities (e.g. 30). Rank the remaining communities by the summed weight of their edges, then take the highest degree node, assign it to the highest-ranked community it belongs to, and remove that node from further consideration. Continue until all nodes are assigned to a community. This may need some more thought.
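A first sketch of this idea is below, under several assumptions that are not settled above: "top N" is read as the N largest communities by member count, a community's weight is the sum of the weights of edges with both endpoints inside it, and the name `assign_locations` and the input layouts are invented for the example.

```python
def assign_locations(communities, edge_weights, degree, skip=0):
    """Give each node exactly one community (its 'location')."""

    def summed_weight(members):
        # Total weight of edges that lie entirely inside the community.
        return sum(
            w for (u, v), w in edge_weights.items()
            if u in members and v in members
        )

    # Assumption: "top N" means the N communities with the most members.
    by_size = sorted(communities, key=lambda c: (-len(communities[c]), c))
    kept = by_size[skip:]
    # Rank the remaining communities, heaviest first.
    ranked = sorted(kept, key=lambda c: (-summed_weight(communities[c]), c))

    nodes = {n for c in kept for n in communities[c]}
    assignment = {}
    # Visit nodes from highest to lowest degree.
    for node in sorted(nodes, key=lambda n: (-degree.get(n, 0), n)):
        for c in ranked:
            if node in communities[c]:
                assignment[node] = c
                break
    return assignment


communities = {
    "big": {"a", "b", "c", "d", "e"},
    "c1": {"a", "b", "c"},
    "c2": {"c", "d"},
}
edge_weights = {("a", "b"): 5, ("b", "c"): 1, ("c", "d"): 10}
degree = {"a": 1, "b": 2, "c": 2, "d": 1}
assignment = assign_locations(communities, edge_weights, degree, skip=1)
```

Two things fall out of this reading. Nodes that only belong to an ignored community (`e` here) end up with no location at all. And because the ranking is fixed up front, the order in which nodes are visited does not change the result; degree order would only matter if the ranks were recomputed after each assignment.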