MIT Contacts – Cell Tower stats

I processed the full contact logs, rather than just the hop logs, and after ~6 hours it completed! Every contact in the whole MIT dataset has now been matched against the cell tower records. If no cell tower could be found for the contact duration, a second search looked for a cell tower record that finished in the previous hour. The timestamps of these records are preserved so that they can be excluded later. The following stats summarise this data with regard to cell towers.
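A minimal Python sketch of the per-contact lookup described above, assuming each cell sighting is a record with a node id, a cell id and start/end times in epoch seconds. `CellRecord` and `cells_for_contact` are hypothetical names, not the code that was actually run. Where several records end in the previous hour, the sketch keeps only the most recent one; that choice is also an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple

ONE_HOUR = 3600  # fallback window, in seconds


@dataclass
class CellRecord:
    """Hypothetical cell sighting: `node` saw tower `cell` from `start` to `end` (epoch seconds)."""
    node: str
    cell: str
    start: int
    end: int


def cells_for_contact(records: List[CellRecord], node: str,
                      start: int, end: int) -> Tuple[List[CellRecord], bool]:
    """Return (matching records, used_fallback) for `node` during a contact [start, end]."""
    mine = [r for r in records if r.node == node]

    # Primary search: sightings that overlap the contact period.
    overlapping = [r for r in mine if r.start <= end and r.end >= start]
    if overlapping:
        return overlapping, False

    # Fallback: a sighting that finished within the hour before the contact started.
    # Keeping only the most recent one is an assumption of this sketch.
    earlier = [r for r in mine if start - ONE_HOUR <= r.end < start]
    if earlier:
        return [max(earlier, key=lambda r: r.end)], True

    return [], False
```

The returned flag marks fallback matches, and the records keep their timestamps, so those rows can be filtered out later.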

Percentages are of all contacts:

- Row count (contacts): 114046
- Both nodes have cells: 28804 (25%)
  - Nodes share a cell: 4045 (4%)
- Neither node has cells: 34587 (30%)
- One side has cells: 50655 (44%)
  - Only the *from* node has cells: 30557 (27%)
  - Only the *to* node has cells: 20098 (18%)
- First start time: Thu, 01 Jan 2004 04:03:17 +0000
- Last end time: Thu, 05 May 2005 03:39:29 +0100
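The categories above can be reproduced with a small classification pass. This is a sketch assuming each contact has already been reduced to two sets of cell ids, one for the *from* node and one for the *to* node. `classify` and `summarise` are hypothetical helpers, and a contact counts as "share" only when the two sets intersect.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def classify(from_cells: Set[str], to_cells: Set[str]) -> List[str]:
    """Return the stats categories a single contact falls into."""
    if from_cells and to_cells:
        # Both sides report cells; they "share" one if the sets intersect.
        return ["both", "share"] if from_cells & to_cells else ["both"]
    if from_cells:
        return ["one_side", "from_only"]
    if to_cells:
        return ["one_side", "to_only"]
    return ["neither"]


def summarise(contacts: Iterable[Tuple[Set[str], Set[str]]]) -> Dict[str, Tuple[int, int]]:
    """Map each category to (count, percentage of all contacts, rounded)."""
    contacts = list(contacts)
    counts: Counter = Counter()
    for from_cells, to_cells in contacts:
        counts.update(classify(set(from_cells), set(to_cells)))
    total = len(contacts)
    return {k: (v, round(100 * v / total)) for k, v in counts.items()}
```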

This means that out of 114046 contacts between 01 Jan 2004 and 05 May 2005, both nodes report cells in 28804, but in only 4045 of these do the two nodes have at least one cell in common at the time of contact. A further 50655 contacts have cells for only one of the nodes. So, if we assume that a one-sided cell sighting at the time of contact determines the cell for both nodes, then we have cell towers for (28804 + 50655) / 114046 ≈ 70% of contacts.
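The coverage figure follows directly from the counts in the stats list; a quick check in Python, using only the numbers reported above:

```python
ROW_COUNT = 114046
BOTH = 28804      # both nodes report cells
NEITHER = 34587   # neither node reports cells
ONE_SIDE = 50655  # only one node reports cells

# The three categories partition all contacts.
assert BOTH + NEITHER + ONE_SIDE == ROW_COUNT

# Treat a one-sided sighting as valid for both nodes.
coverage = (BOTH + ONE_SIDE) / ROW_COUNT
print(f"{coverage:.1%}")  # 69.7%
```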

The assumption here is that if any cell tower is seen by both nodes during the contact period, the nodes are considered to share a cell. If needed, this rule can be made stricter for very long contact periods.
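One way to express the sharing rule, plus a stricter variant for long contacts, is sketched below. The `(cell_id, start, end)` tuple format and the `max_gap` parameter are assumptions for illustration only. With no `max_gap`, any tower seen by both nodes during the contact counts; with `max_gap`, the two nodes' sightings of that tower must also fall within `max_gap` seconds of each other.

```python
from typing import Iterable, Optional, Tuple

Sighting = Tuple[str, int, int]  # hypothetical (cell_id, start, end), epoch seconds


def share_cell(a: Iterable[Sighting], b: Iterable[Sighting],
               max_gap: Optional[int] = None) -> bool:
    """Decide whether two nodes share a cell during one contact."""
    a, b = list(a), list(b)
    if max_gap is None:
        # Loose rule: any tower seen by both nodes during the contact.
        return bool({c for c, _, _ in a} & {c for c, _, _ in b})
    # Stricter rule: same tower, and the two sighting intervals are
    # overlapping or separated by at most max_gap seconds.
    for ca, sa, ea in a:
        for cb, sb, eb in b:
            if ca == cb and sa <= eb + max_gap and sb <= ea + max_gap:
                return True
    return False
```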

UPDATE:

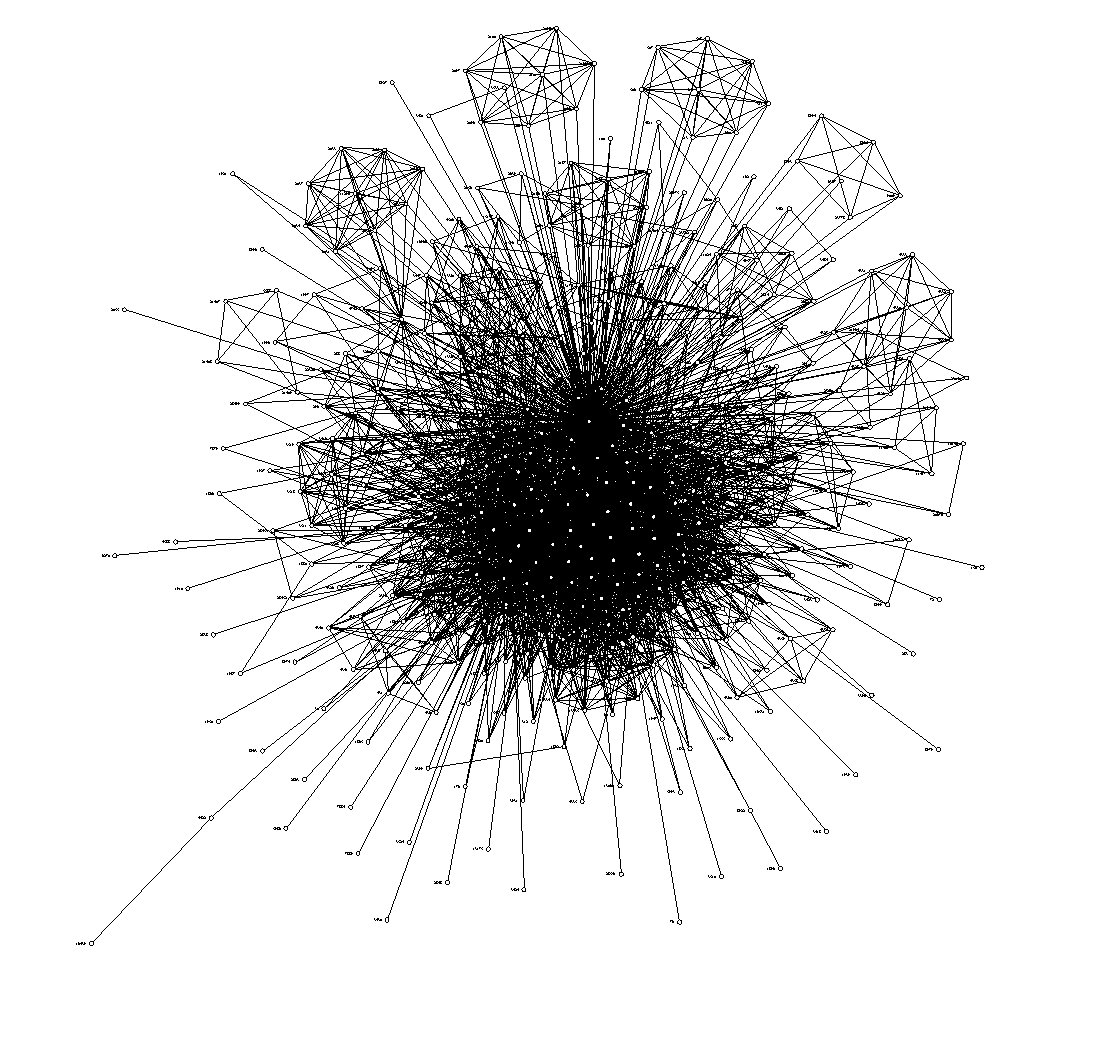

The figure shows the connected cell towers for MIT-Oct 2004. It is very cluttered, with a huge central mass, but there are clear connections between towers on the periphery. I think this can be refined to be stricter about cell tower connections, since at the moment the dataset can contain more than one cell tower for a given contact period. Also, it currently links two cells even when two nodes are in contact for a long period of time, during which they could conceivably be moving together.

Cell towers where an edge is made when either node in a contact event has seen one of the cell towers.
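The tower graph can be sketched as follows, assuming each contact carries its start/end times and the set of towers seen by each node. Every pair of towers seen by either node in a contact is linked, with edge weights counting how many contacts produced the link. The `max_duration` filter is a hypothetical way of dropping long contacts where the nodes might be moving together; it is not part of the current visualisation.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, Optional, Set, Tuple

Contact = Tuple[int, int, Set[str], Set[str]]  # (start, end, from_cells, to_cells)


def tower_graph(contacts: Iterable[Contact],
                max_duration: Optional[int] = None) -> Counter:
    """Return weighted edges {(tower_u, tower_v): count} between co-observed towers."""
    edges: Counter = Counter()
    for start, end, from_cells, to_cells in contacts:
        # Optional stricter rule: skip long contacts (nodes may be travelling together).
        if max_duration is not None and end - start > max_duration:
            continue
        # Union of towers seen by either node during this contact.
        towers = sorted(set(from_cells) | set(to_cells))
        for u, v in combinations(towers, 2):
            edges[(u, v)] += 1
    return edges
```

Sorting the towers before pairing keeps each undirected edge under a single key, so repeated links accumulate weight instead of appearing as `(a, b)` and `(b, a)`.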