Some new ideas

Met with Pádraig. The results so far are incomplete, so we still need to:

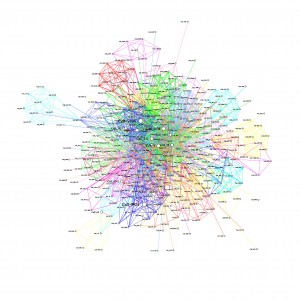

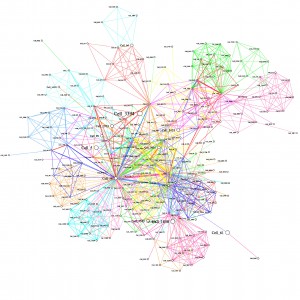

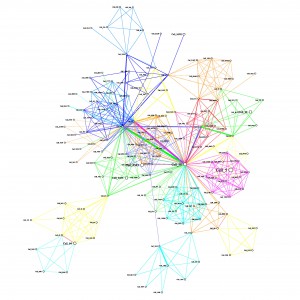

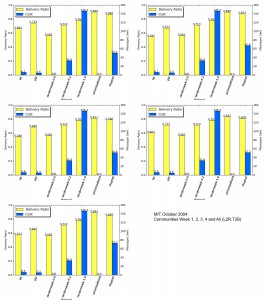

- Run routing based on ranked centrality only (i.e. the aggregate graph of all connections), to give us a more complete picture of what routing towards the centre really looks like. Graham might have done this already.

- Do more randomised ranking runs, to see if random ranking can come up with a better routing rank than LBR.

- Implement and test new LBR idea.

Next Step for LBR

Pádraig suggested a simple advancement for LBR:

Person A has a message for Person C and has encountered Person B. A has to work out whether or not to pass the message on. Each node has a probability matrix of visiting all locations at any time.

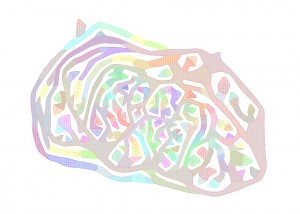

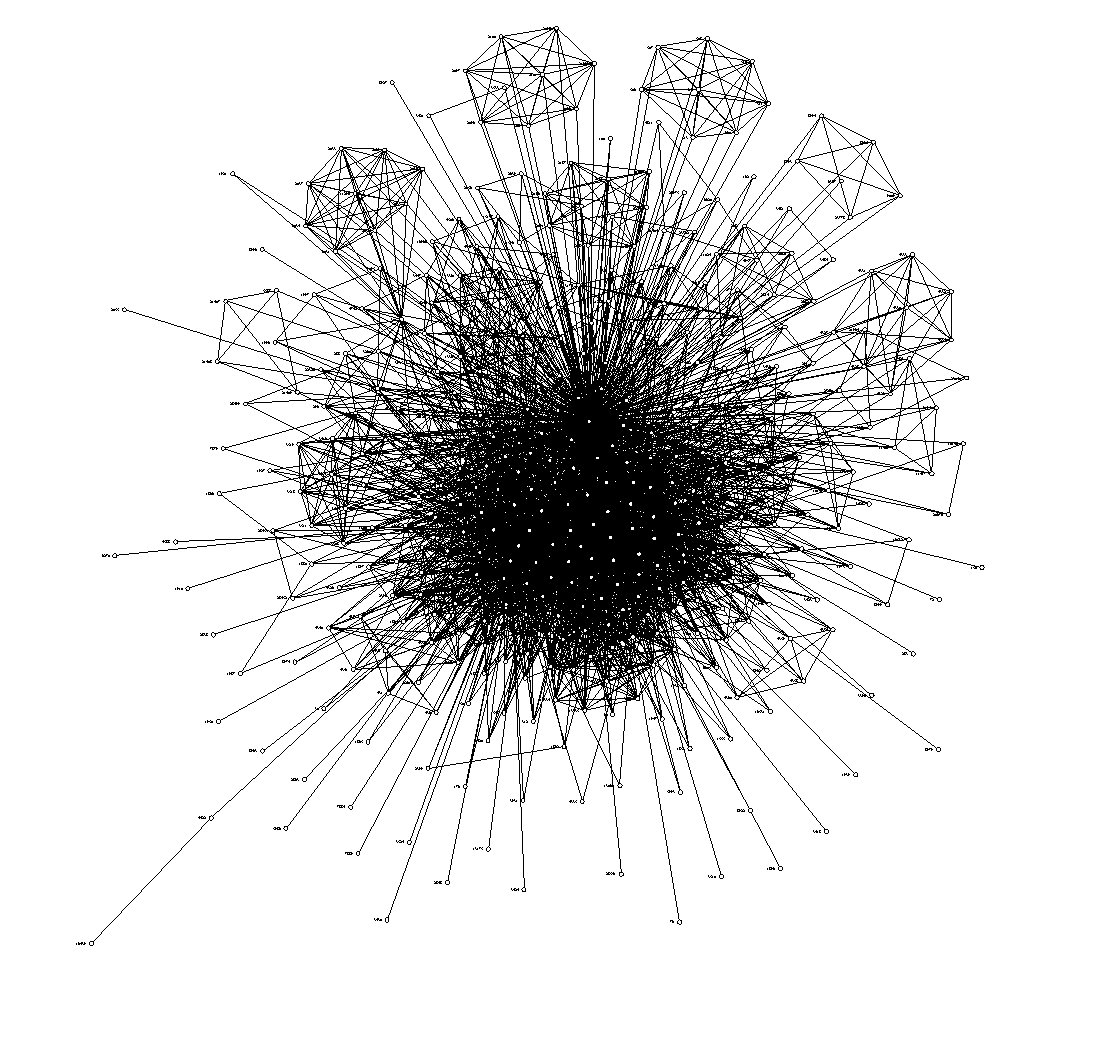

Probability matrix of nodes being at any given location

A makes the decision by calculating the dot product of A’s own location probabilities with C’s (A·C), and comparing it to the same calculation for B and C (B·C). If B·C is greater than A·C, then A passes the message to B. The rationale is that when a node encounters someone who is more likely than itself to visit the same locations as the destination person, the message should be passed on, because that person is more likely to see the destination.
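The dot-product comparison described above can be sketched as follows. This is a minimal illustration, not the actual LBR implementation: it assumes each node's probability matrix has been reduced to a single vector with one probability per location, and the names `dot` and `should_forward` as well as the example values are hypothetical.

```python
def dot(p, q):
    """Dot product of two equal-length location-probability vectors."""
    if len(p) != len(q):
        raise ValueError("vectors must cover the same set of locations")
    return sum(x * y for x, y in zip(p, q))


def should_forward(carrier, encountered, destination):
    """True if the encountered node overlaps more with the destination
    than the current carrier does (B.C > A.C)."""
    return dot(encountered, destination) > dot(carrier, destination)


# Hypothetical probabilities of being at each of four locations.
a = [0.6, 0.2, 0.2, 0.0]  # current carrier
b = [0.1, 0.2, 0.3, 0.4]  # encountered node
c = [0.0, 0.1, 0.4, 0.5]  # destination

# B shares more of C's likely locations than A does, so A hands over.
print(should_forward(a, b, c))
```

If the time dimension of the matrix is kept, the same comparison could be made per time slot and summed, but that is an extension beyond what was discussed.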

There are some caveats. TODO: buffer zone, future prediction, past history, recent(ness?), limited locations.

Some specific details/ideas/extensions to consider:

- We need to consider how these probability matrices will evolve over time. A node’s probability matrix will change when it visits new places or alters existing mobility patterns. Initially we can use the computed data, but more refined versions should reflect a real-world scenario with unpredictable behaviour.

- Determine a good threshold to use, so that messages are not sent between people of very similar rank/score. The rationale is that there may be no benefit in passing the message on in that case, so skipping the transfer reduces overhead.

- Limit the number of locations considered to only those that are popular. This might boost the use of popular locations, in an attempt to achieve a higher probability of a message being passed on.

- Consider a more sophisticated mechanism to predict co-location with the destination node, or a better conduit/carrier node, by predicting future interactions with nodes based on past history.

- It may also be important to show the possibility of real-world application, by implementing a scheme for automatic dissemination and update of the probability matrix using the network itself. This relates to previous ideas about piggybacking meta-data using network/vector clocks, which can themselves be used as a source of routing metrics, e.g. recentness of contact, latency, update routes/speeds/times etc.
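Two of the ideas in this list, the forwarding threshold and the restriction to popular locations, are easy to sketch on top of the dot-product comparison. The code below is only an illustration under assumptions: "popular" is taken to mean the highest summed visit probability across all known nodes, the threshold is an absolute difference between the two scores, and every function name and value is hypothetical.

```python
def dot(p, q):
    """Dot product of two equal-length location-probability vectors."""
    return sum(x * y for x, y in zip(p, q))


def popular_locations(vectors, k):
    """Indices of the k locations with the highest total visit
    probability across all nodes (an assumed notion of popularity)."""
    totals = [sum(column) for column in zip(*vectors)]
    ranked = sorted(range(len(totals)), key=lambda i: totals[i], reverse=True)
    return sorted(ranked[:k])


def should_forward(carrier, encountered, destination,
                   threshold=0.0, locations=None):
    """Forward only if the encountered node beats the carrier by more
    than `threshold`, optionally scoring only the given locations."""
    if locations is not None:
        carrier = [carrier[i] for i in locations]
        encountered = [encountered[i] for i in locations]
        destination = [destination[i] for i in locations]
    gain = dot(encountered, destination) - dot(carrier, destination)
    return gain > threshold


# Hypothetical probabilities of being at each of four locations.
a = [0.6, 0.2, 0.2, 0.0]
b = [0.1, 0.2, 0.3, 0.4]
c = [0.0, 0.1, 0.4, 0.5]

top = popular_locations([a, b, c], k=2)
print(top)
print(should_forward(a, b, c, threshold=0.1, locations=top))
print(should_forward(a, b, c, threshold=0.3))  # gain too small to bother
```

A good value for the threshold would have to come from experiments; the figures here are placeholders.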

Pádraig and I discussed the problems we may encounter with regard to peer review and justification of the work: the problem area is not well defined, so we might have trouble showing why this work is novel and proving what our contributions are. To that end, we need to explore the literature a little more, so that we can show a solid justification for the work or, alternatively, change our approach so that it fits in better with other, better-defined problems.

What sorts of messages are ‘delay tolerant’? Pádraig’s suggestion is that Twitter messages and Facebook update messages might be delay tolerant: a person may not need to receive all messages and generally only wants the latest updates, so it does not matter if a few are lost along the way.

How do we define the urgency of messages, and the efficiency of the network? Perhaps one type of message can be delivered within 10 time periods and still be considered relevant and within acceptable delivery time, whereas another may need to be delivered within 1 time period to ensure quality of service.

There is a categorisation issue too: some messages can be considered one-to-one (direct messaging), some one-to-many (Twitter update), some many-to-many (local information) and some many-to-one (sensor networks). We need to decide which of these we will consider. On this note, I said I would speak to Neil Cowzer, who is working on implicit group messaging, to see what his motivation is and whether he has a well-defined problem space that he is tackling.
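One possible way to make this concrete is to give each message type a maximum acceptable delay and to measure efficiency as the fraction of messages delivered within that delay. The sketch below is an assumption rather than an agreed definition: the message kinds, the `MAX_DELAY` table and the `on_time_ratio` metric are all hypothetical, and only the figures of 10 and 1 time periods come from the example above.

```python
from dataclasses import dataclass


@dataclass
class Message:
    kind: str        # hypothetical message class name
    created_at: int  # time period in which the message was created


# Hypothetical deadlines, in time periods, using the two example figures.
MAX_DELAY = {"status_update": 10, "urgent": 1}


def delivered_in_time(message, delivered_at):
    """True if the message arrived within the deadline for its kind."""
    return delivered_at - message.created_at <= MAX_DELAY[message.kind]


def on_time_ratio(deliveries):
    """Fraction of messages delivered within their deadline.

    `deliveries` is a list of (message, delivered_at) pairs, where
    delivered_at is None for a message that never arrived.
    """
    if not deliveries:
        return 0.0
    on_time = sum(
        1
        for message, delivered_at in deliveries
        if delivered_at is not None and delivered_in_time(message, delivered_at)
    )
    return on_time / len(deliveries)


deliveries = [
    (Message("status_update", created_at=0), 7),   # within 10 periods
    (Message("status_update", created_at=0), 12),  # too late
    (Message("urgent", created_at=3), 4),          # within 1 period
    (Message("urgent", created_at=3), None),       # never delivered
]
print(on_time_ratio(deliveries))
```

A metric like this counts a late message the same as a lost one, which may or may not be what we want for delay-tolerant traffic.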

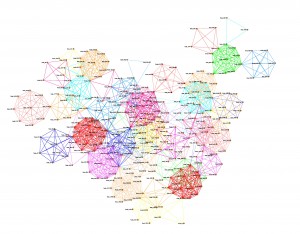

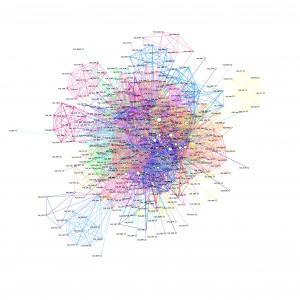

Another alternative that Pádraig suggested was to take a social science approach, looking at heuristic approaches to routing in complex social networks. His suggestion was that on some networks we might be able to apply certain routing techniques which do not work on others. The contribution would be defining, categorising and testing new and existing combinations of network types and routing mechanisms. This would be an interesting route to take, but it would mean a step back in my research, as I would need to read into this area. It would link up well with the work of Stanley Milgram, Granovetter, Watts & Strogatz etc., so I should re-read some of this work but, more importantly, take a look at the most highly cited documents that cite it, to see where research might be heading now.