It is important to make sure that each community finding algorithm (CFA) competes on a level playing field, so that the algorithms can be tested fairly.

The dataset is MIT Reality Mining, which starts in August 200? and ends in May 200?.

The Bubble RAP algorithm uses k-CLIQUE for community finding. The graph contains the CORE nodes in the dataset. An edge is created when a contact is made between two nodes, and it is labelled with the total time the two nodes were connected. Edges whose total connected time is less than a defined threshold are then removed, and communities are detected using the k-CLIQUE algorithm with k = 3. Graham uses a threshold of 2 days; the BubbleRAP paper uses 4.5 days.
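The thresholding and k-CLIQUE steps above can be sketched as follows. This is a minimal illustration, not the BubbleRAP or Graham implementation: it assumes contacts arrive as hypothetical `(node_a, node_b, duration_seconds)` tuples with sortable node IDs, and it implements clique percolation for k = 3 directly, merging triangles that share an edge into one community. NetworkX provides a general version as `networkx.algorithms.community.k_clique_communities(G, k)`.

```python
from collections import defaultdict
from itertools import combinations

DAY = 24 * 60 * 60  # seconds in one day

def threshold_graph(contacts, threshold_s):
    """Sum connected time per node pair and keep pairs at or above threshold_s.

    contacts: iterable of (node_a, node_b, duration_seconds); this input
    format is an assumption for illustration. Returns an adjacency dict.
    """
    total = defaultdict(float)
    for a, b, duration in contacts:
        if a != b:
            total[frozenset((a, b))] += duration
    adj = defaultdict(set)
    for pair, connected_s in total.items():
        if connected_s >= threshold_s:  # edges below the threshold are dropped
            a, b = tuple(pair)
            adj[a].add(b)
            adj[b].add(a)
    return adj

def k3_clique_communities(adj):
    """Clique percolation with k = 3: triangles sharing an edge are merged."""
    # List every triangle once, ordered u < v < w (node IDs must be sortable).
    triangles = []
    for u in adj:
        for v in adj[u]:
            if v <= u:
                continue
            for w in adj[u] & adj[v]:
                if w > v:
                    triangles.append((u, v, w))

    # Union-find over triangle indices.
    parent = list(range(len(triangles)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Two 3-cliques are adjacent when they share k - 1 = 2 nodes, i.e. an edge.
    first_with_edge = {}
    for i, tri in enumerate(triangles):
        for edge in combinations(tri, 2):  # tri is sorted, so edge keys are too
            if edge in first_with_edge:
                parent[find(i)] = find(first_with_edge[edge])
            else:
                first_with_edge[edge] = i

    communities = defaultdict(set)
    for i, tri in enumerate(triangles):
        communities[find(i)].update(tri)
    return list(communities.values())

if __name__ == "__main__":
    contacts = [
        ("a", "b", 3 * DAY), ("b", "c", 3 * DAY), ("a", "c", 3 * DAY),
        ("b", "d", 3 * DAY), ("c", "d", 3 * DAY),
        ("d", "e", 1 * DAY),  # below a 2-day threshold, so removed
        ("e", "f", 3 * DAY), ("f", "g", 3 * DAY), ("e", "g", 3 * DAY),
    ]
    adj = threshold_graph(contacts, threshold_s=2 * DAY)  # Graham's 2-day threshold
    print(k3_clique_communities(adj))
```

With a 2-day threshold the example yields two communities, {a, b, c, d} and {e, f, g}; raising `threshold_s` to 4.5 days (the BubbleRAP paper's value) would remove every edge here and leave no communities.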

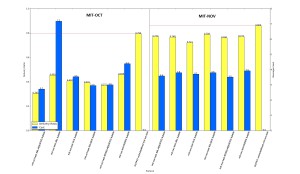

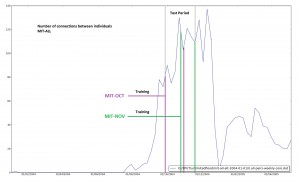

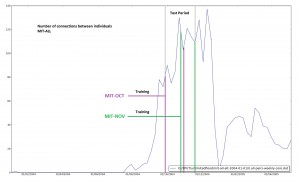

MIT-OCT has been used extensively by Graham, along with MIT-NOV, to test his algorithms, so we will continue to use these time periods for testing. I believe they were chosen because activity was recorded for a large number of users in the dataset during these periods. However, we must make sure that there is sufficient training time for all clustering algorithms to make a good effort at finding communities.

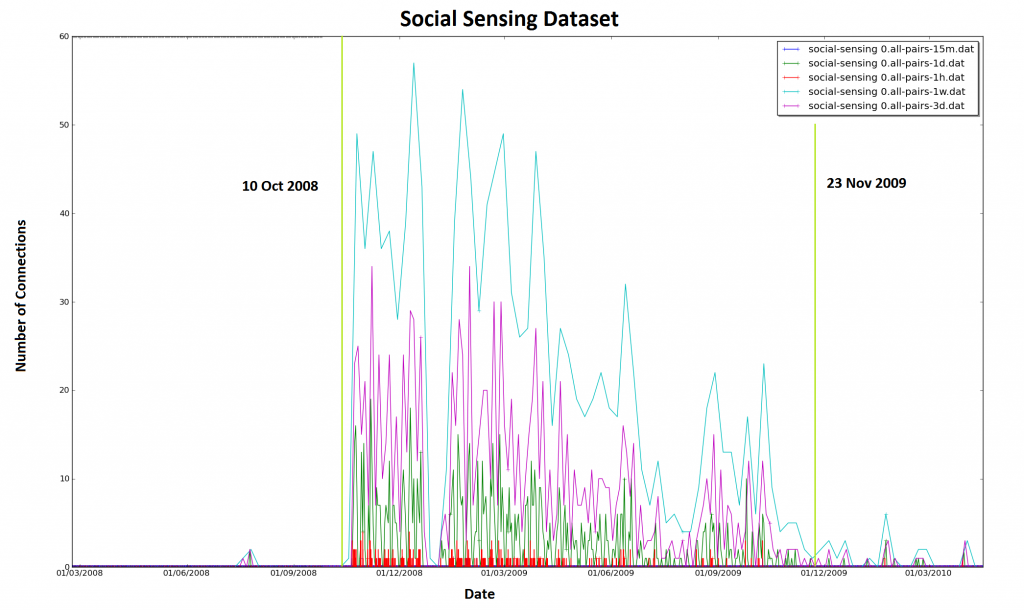

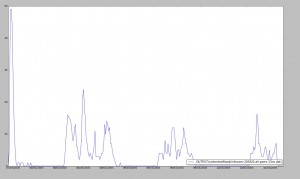

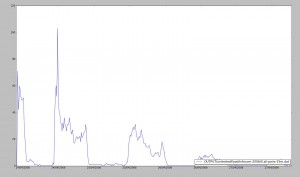

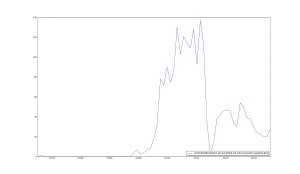

The figure below shows the average number of connections per week for the whole dataset.

The average number of connections (Bluetooth contacts) per week in the MIT dataset
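One way to compute a weekly series like the one in this figure is sketched below. The exact definition of "average" behind the figure is not stated, so this sketch assumes it means contacts per active node: the number of contacts starting in each ISO week divided by the number of distinct nodes involved that week. The `(node_a, node_b, start_unix_seconds)` input format and treating timestamps as UTC are also assumptions.

```python
from collections import Counter, defaultdict
from datetime import datetime, timezone

def weekly_average_contacts(contacts):
    """Return {(iso_year, iso_week): contacts per active node} in week order.

    contacts: iterable of (node_a, node_b, start_unix_seconds); this format,
    and treating timestamps as UTC, are assumptions for illustration.
    """
    counts = Counter()         # contacts starting in each week
    active = defaultdict(set)  # distinct nodes seen in each week
    for a, b, start in contacts:
        iso = datetime.fromtimestamp(start, tz=timezone.utc).isocalendar()
        week = (iso[0], iso[1])  # (ISO year, ISO week number)
        counts[week] += 1
        active[week].update((a, b))
    return {week: counts[week] / len(active[week]) for week in sorted(counts)}
```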

A note about the MIT-OCT dataset: the nodes fall into two groups, those belonging to the CORE set and the rest, which belong to the encompassing ALL set. A node is in the CORE set if it is part of the MIT Reality Mining study and is reporting readings. A node that is in the ALL set but not in the CORE set represents a device that has been spotted by a CORE node, but about which nothing is known apart from its contact trace. These nodes are normally excluded from the routing process, as it would not be possible to communicate with them.
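Separating the two node groups before building a routing graph can be sketched as below. This is an illustrative helper, not code from the study; it assumes a hypothetical edge list of `(node_a, node_b, weight)` tuples and a known collection of CORE node IDs.

```python
def split_core_all(edges, core_nodes):
    """Split an edge list by CORE membership.

    Returns (core_edges, external_nodes): core_edges keeps only edges whose
    endpoints are both CORE nodes; external_nodes are the nodes in the ALL
    set but not in CORE (devices known only from contact traces).
    """
    core = set(core_nodes)
    all_nodes = {n for a, b, _ in edges for n in (a, b)} | core
    external_nodes = all_nodes - core
    core_edges = [(a, b, w) for a, b, w in edges if a in core and b in core]
    return core_edges, external_nodes
```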

So, for the first experiment, each algorithm will generate communities from two training periods: 01 Aug 2004 to 27 Sep 2004 (MIT-OCT) and 01 Aug 2004 to 18 Nov 2004 (MIT-NOV). The corresponding test periods will be 27 Sep 2004 to 25 Oct 2004 and 18 Nov 2004 to 15 Dec 2004.
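Assigning contacts to a training or test window can be sketched as follows, using the MIT-OCT dates as the worked example. The `(node_a, node_b, start_unix_seconds, duration_seconds)` format, the half-open `[start, end)` windows, and reading dates as UTC midnight are assumptions; a contact is placed by its start time only.

```python
from datetime import datetime, timezone

def ts(day):
    """'2004-08-01' -> Unix seconds at 00:00 UTC on that day (UTC is an assumption)."""
    return datetime.strptime(day, "%Y-%m-%d").replace(tzinfo=timezone.utc).timestamp()

def split_contacts(contacts, train_start, split, test_end):
    """Contacts starting in [train_start, split) train; in [split, test_end) test."""
    train = [c for c in contacts if train_start <= c[2] < split]
    test = [c for c in contacts if split <= c[2] < test_end]
    return train, test

# MIT-OCT: train 01 Aug 2004 to 27 Sep 2004, test 27 Sep 2004 to 25 Oct 2004.
MIT_OCT = (ts("2004-08-01"), ts("2004-09-27"), ts("2004-10-25"))
```

Usage: `train, test = split_contacts(contacts, *MIT_OCT)`. The same function applies to MIT-NOV by swapping in its training and test boundaries.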

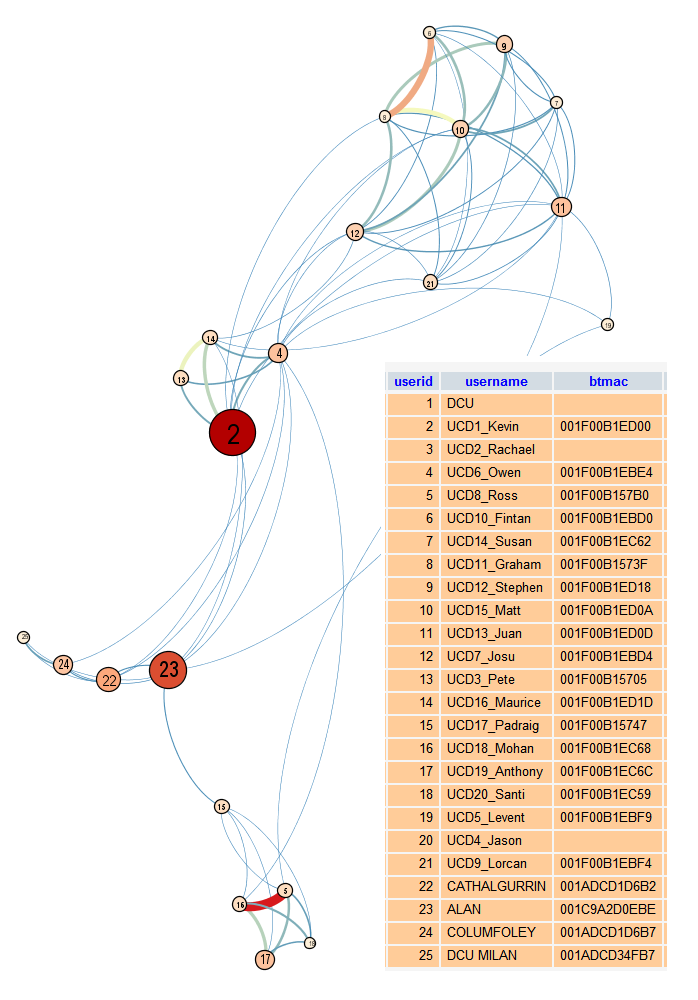

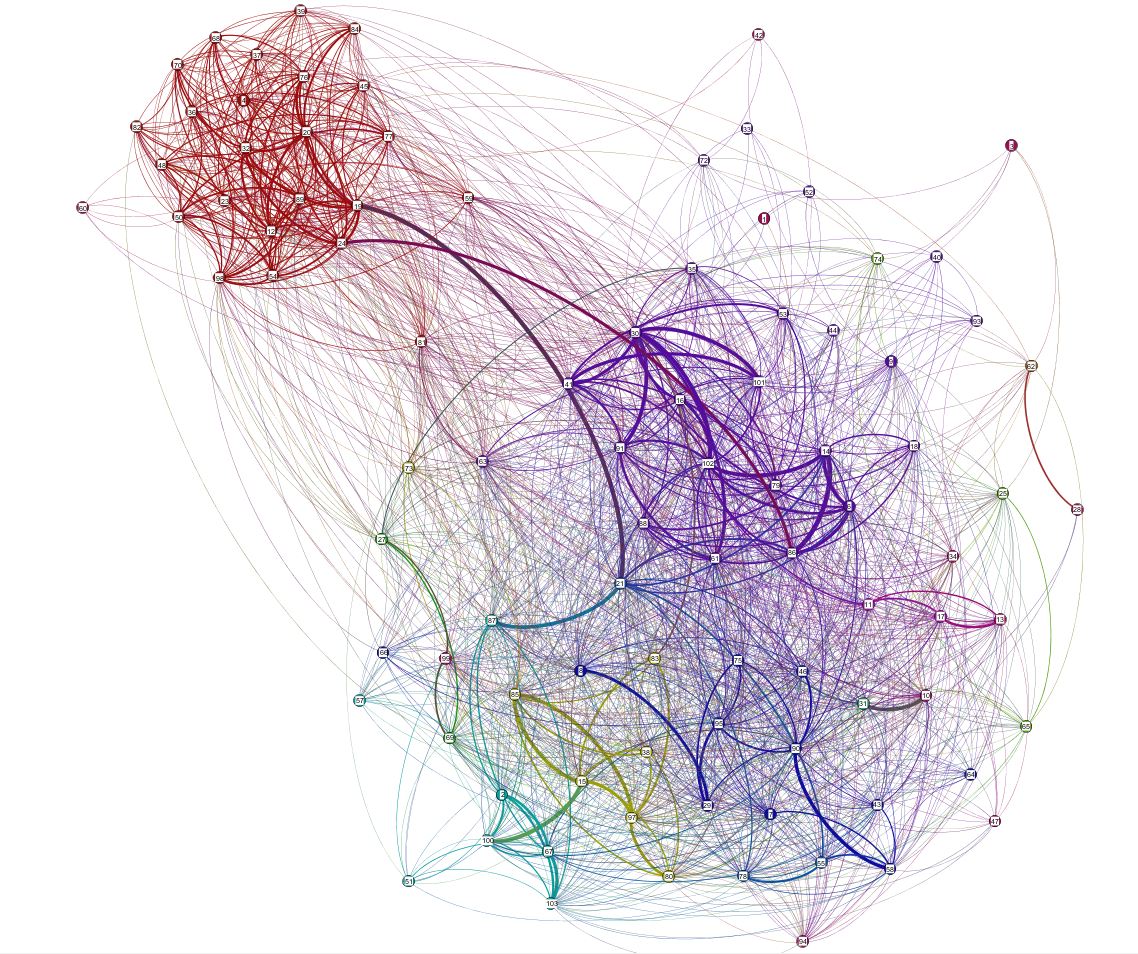

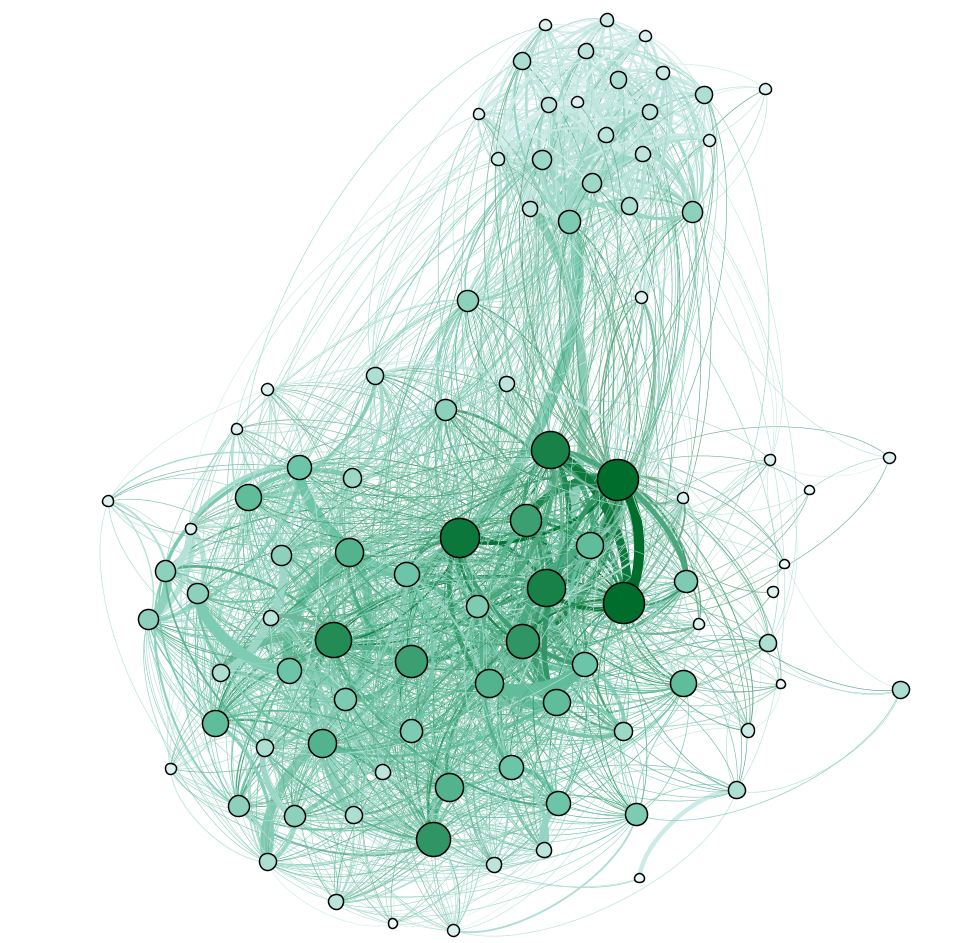

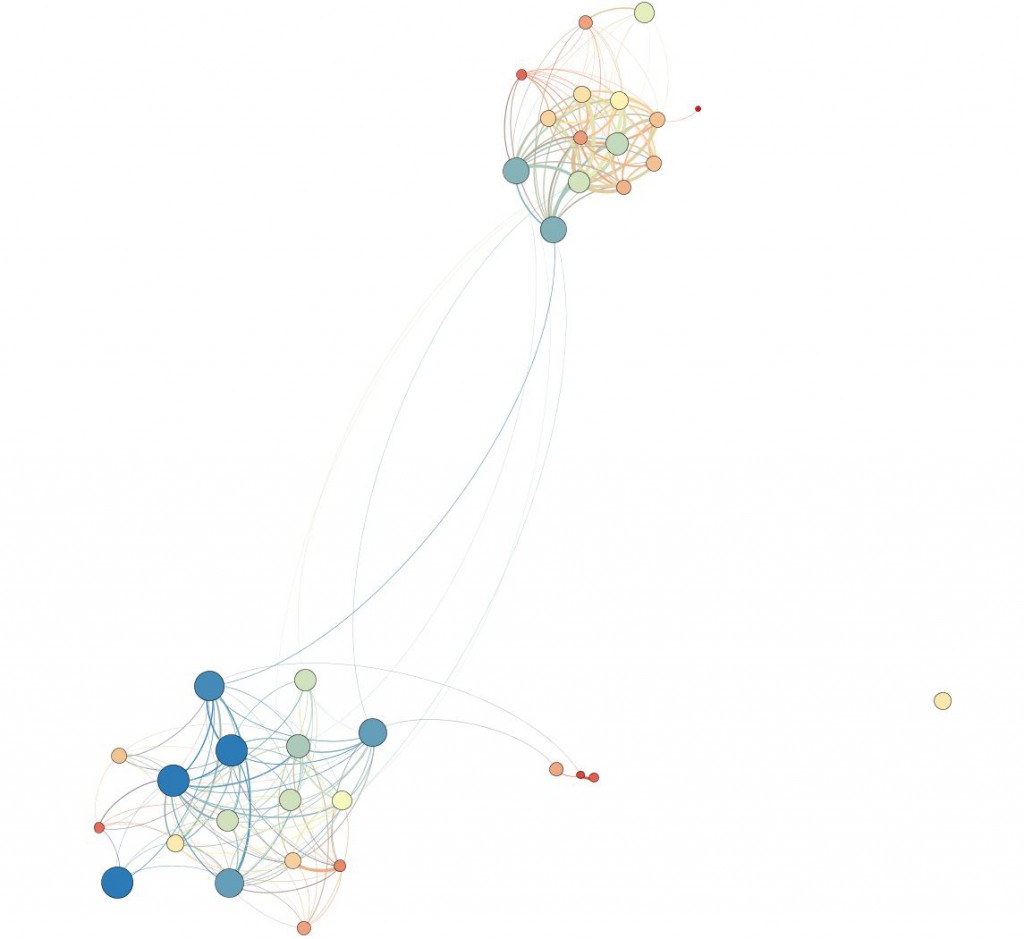

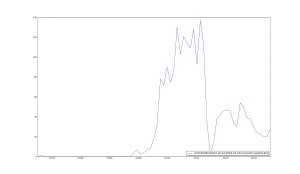

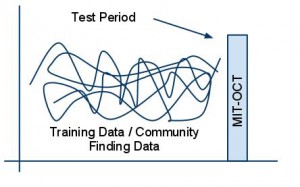

The image below shows the network generated from the MIT-OCT training period, with some of the low-weight (short connected time) edges removed for clarity. It appears to contain three groups of nodes.

Visualisation of MIT OCT Training edge list. Edges are weighted by the amount of time nodes are connected, with low weight edges removed for clarity. Nodes are sized and coloured by degree.

Some options for community finding algorithms:

- Should the graph they all work on be limited by the connected-time threshold?
  - Justification: BubbleRAP determines this to be important.
- Whether to use CORE or ALL nodes.
- If the CFAs cannot successfully find communities for the training period, we will need to experiment with time periods that suit all algorithms.

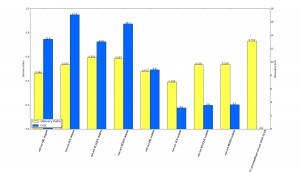

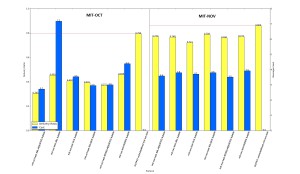

The table below shows the results of community finding on the training datasets MIT-OCT and MIT-NOV. The exception is KCLIQUE, which performs very poorly for any threshold value on data ending before the end of December; its communities were therefore calculated from 01 Aug 2004 to 31 Dec 2004.

| Algorithm | # Communities | Average Size (nodes) |
|---|---|---|
| **MIT-OCT-TRAINING (01 Aug 2004 to 27 Sep 2004)** | | |
| KCLIQUE, t = 2 days (data to 31 Dec 2004) | 8 | 7 |
| MOSES | 7 | 35 |
| MOSES adjusted graph \* | 1 | 12 |
| GCE | 4 | 60 |
| ABL adjusted graph \* | 11 | 3 |
| ABL | 113 | 7 |
| **MIT-NOV-TRAINING (01 Aug 2004 to 18 Nov 2004)** | | |
| KCLIQUE, t = 2 days (data to 31 Dec 2004) | 8 | 7 |
| MOSES | 8 | 42 |
| MOSES adjusted graph \* | 3 | 7 |
| GCE | 2 | 51 |
| ABL adjusted graph \* | 41 | 3 |
| ABL | 99 | 8 |

\* Adjusted graph: some edges were removed from the edge list before it was passed to the algorithm; in this case, edges where the total connected time of the two nodes was less than 1 day.
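The "adjusted graph" filter and the two table columns can be sketched as below. This is an illustration under assumptions: edges are hypothetical `(node_a, node_b, total_connected_seconds)` tuples, each algorithm's output is a list of node sets, and the table's average size is taken to be the mean community size in nodes, rounded for display.

```python
from statistics import mean

DAY = 24 * 60 * 60  # seconds in one day

def adjust_edge_list(edges, min_connected_s=DAY):
    """Drop edges whose total connected time is under 1 day (the 'adjusted graph')."""
    return [(a, b, w) for a, b, w in edges if w >= min_connected_s]

def summarise(communities):
    """Return (# communities, average community size in nodes)."""
    sizes = [len(c) for c in communities]
    return len(sizes), (mean(sizes) if sizes else 0.0)
```

Because these algorithms can return overlapping communities, a node may contribute to the size of more than one community.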

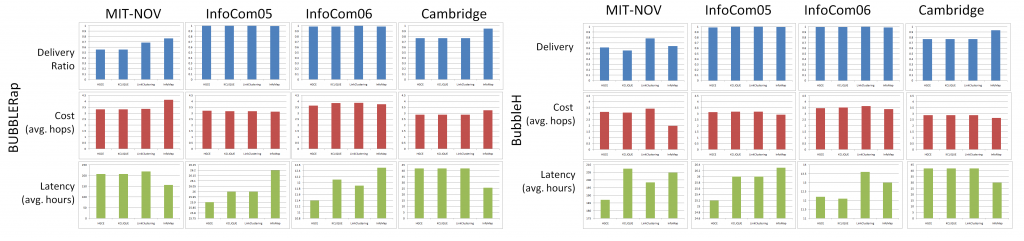

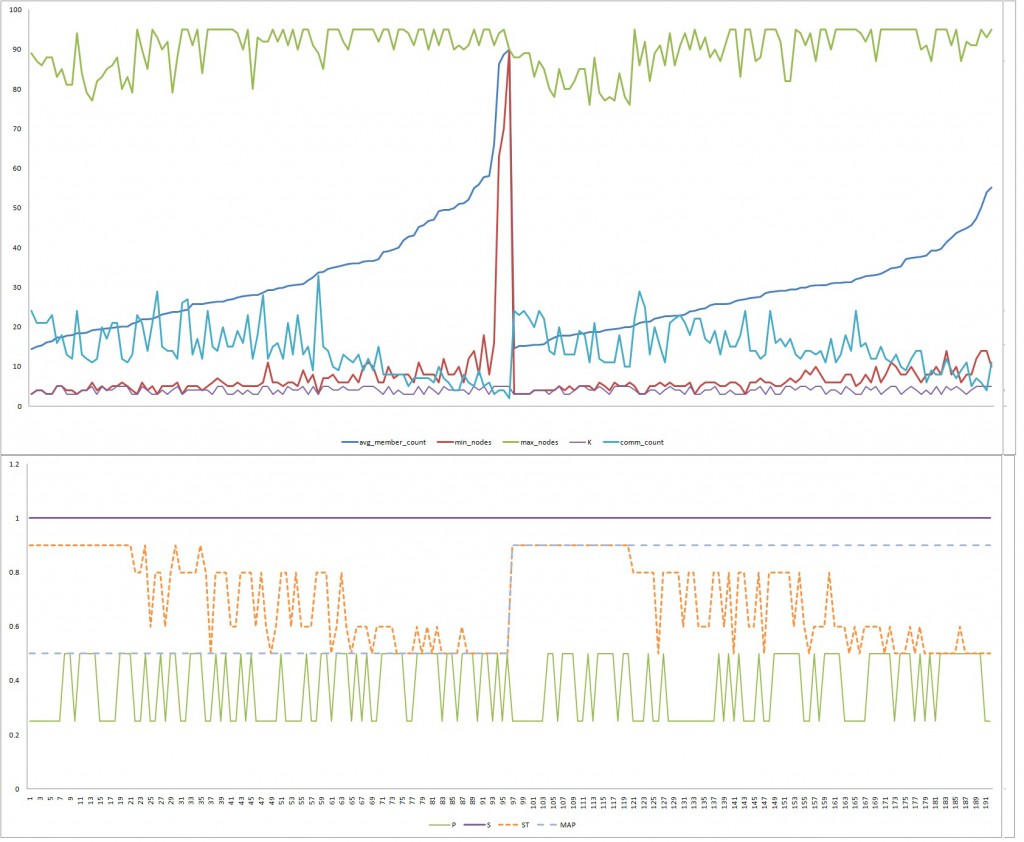

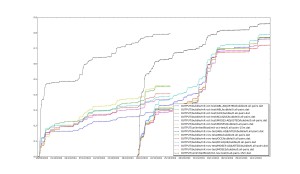

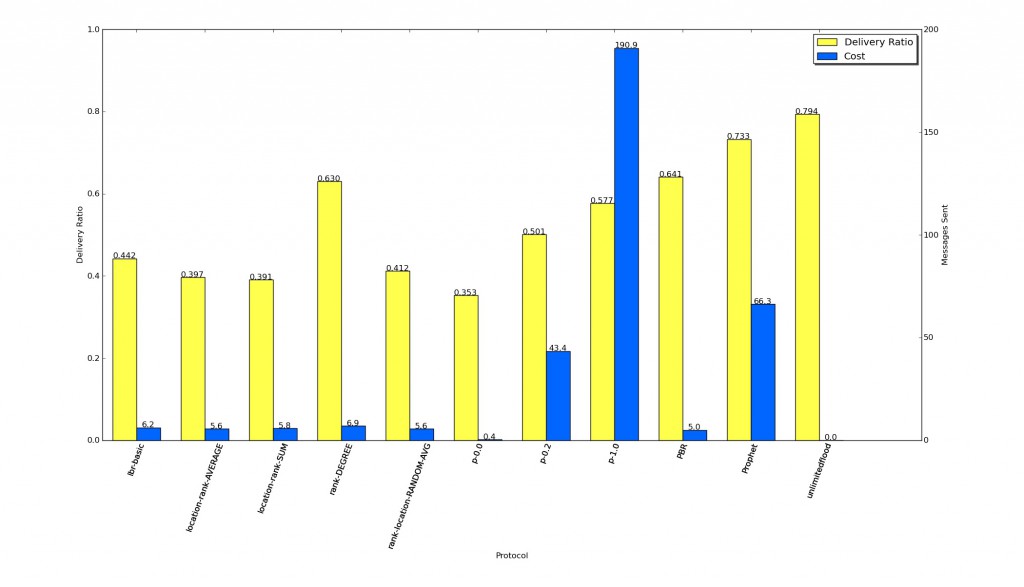

Bubble results for the Oct and Nov 2004 test periods, with training periods 01 Aug 2004 to 27 Sep 2004 and 01 Aug 2004 to 18 Nov 2004 respectively.


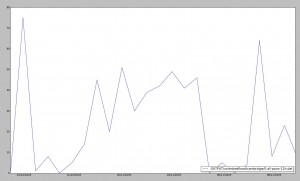

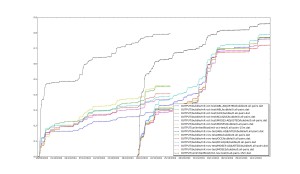

Average weekly connections, showing training period and test period for MIT-OCT and MIT-NOV