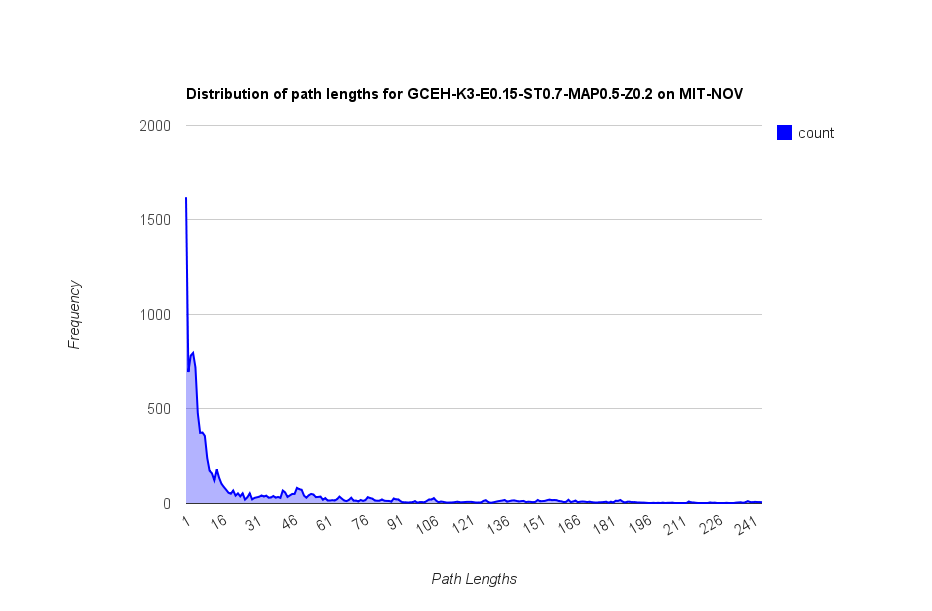

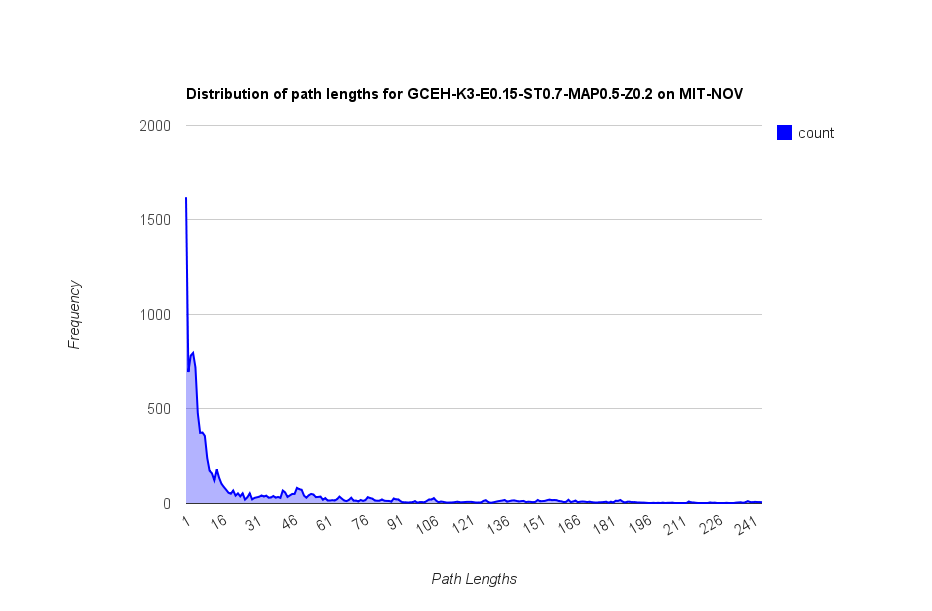

In the recent paper that we submitted to SMW2011, we showed that we could increase the delivery ratio using overlapping hierarchical clustering, however, we also identified that the cost involved was much higher than we expected, up to 245 hops in some cases. To find out what was happening, we checked the behaviour of the best performing parameters (GCEH-K3-E0.15-ST0.7-MAP0.5-Z0.2) by recording the route of each message along with some hints as to why the message was being passed at that point. Hop Stats for GCEH-K3-E0.15-ST0.7-MAP0.5-Z0.2 (11MB uncompressed).

This is the current algorithm:

[expand title=”click to view algorithm”]

for (BubbleHMessage message : my_buffer) {

/**

* Identify smallest community that Self and Destination share =

* Bridging Community

* */

int my_bridge_community = BubbleHierarchyOracle.bridgeCommunity(my_node.getID(), message.dest, my_properties);

// if encountered node is destination

if (process.getNode().getID() == message.dest) {

// pass it on to them.

destination_met++;

toSend.add(message);

message.setHopCode("DM");

} else {

int remote_bridge = BubbleHierarchyOracle.bridgeCommunity(process.getNode().getID(), message.dest, my_properties);

if (BubbleHierarchyOracle.isBetter(remote_bridge, my_bridge_community)) {

// if P is in community with message.dest, that is smaller

// than BC

// pass message

message.setHopCode(remote_bridge +"-isBetter-" + my_bridge_community+ ":" + BubbleHierarchyOracle.whyIsBetter(remote_bridge, my_bridge_community));

toSend.add(message);

smaller_community_found++;

} else if (process.getCommunities().contains(my_bridge_community)) {// if both nodes are in the bridge community

// if the rank of the encountered node is higher, pass the message

if (process.getlocalRank(my_bridge_community) > getlocalRank(my_bridge_community)) {

// pass message

toSend.add(message);

message.setHopCode("RANKBC-" + my_bridge_community);

bridge_community_ranking_message_passed++;

}

} else {

// process is not destination, is not in a smaller community

// with the destination, and is not in the bridge community,

// therefore we do not pass the message to it

}

}

}

[/expand]

The isBetter function:

[expand title=”click to view isBetter function”]

public static boolean isBetter(int candidate_bridgeCommunity, int test_bridgeCommunity) {

if(candidate_bridgeCommunity == -1 && test_bridgeCommunity == -1){

// neither have a shared community

return false;

}else if((candidate_bridgeCommunity == -1) && (test_bridgeCommunity >= 0)){

// candidate shares a community with dest

return false;

}else if((candidate_bridgeCommunity >= 0) && (test_bridgeCommunity == -1)){

// current shares a community, but candidate does not

return true;

}else if(heirarachy.level(candidate_bridgeCommunity) > heirarachy.level(test_bridgeCommunity)){

// candidate has a higher level

return true;

}else if (heirarachy.members(candidate_bridgeCommunity).length < heirarachy.members(test_bridgeCommunity).length){

// candidate has less members

return true;

}else{

return false;

}

}

[/expand]

Some information about the run:

Log first ran in simulator: 2004-12-09_23:57:24

Log Filename: [...]0.hop-stats.dat

Mesages: 10506

Hops: 276313

Avg: 26

Max Hop Count: 245

Earliest Hop: Wed, 10 Nov 2004 00:00:00 +0000

Latest Hop: Thu, 09 Dec 2004 23:13:29 +0000

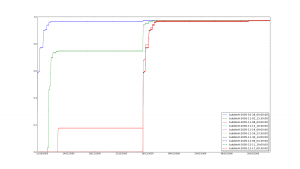

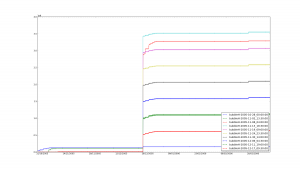

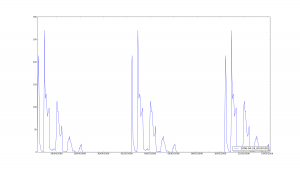

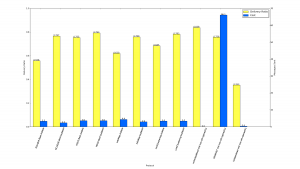

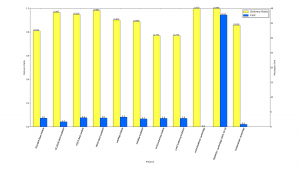

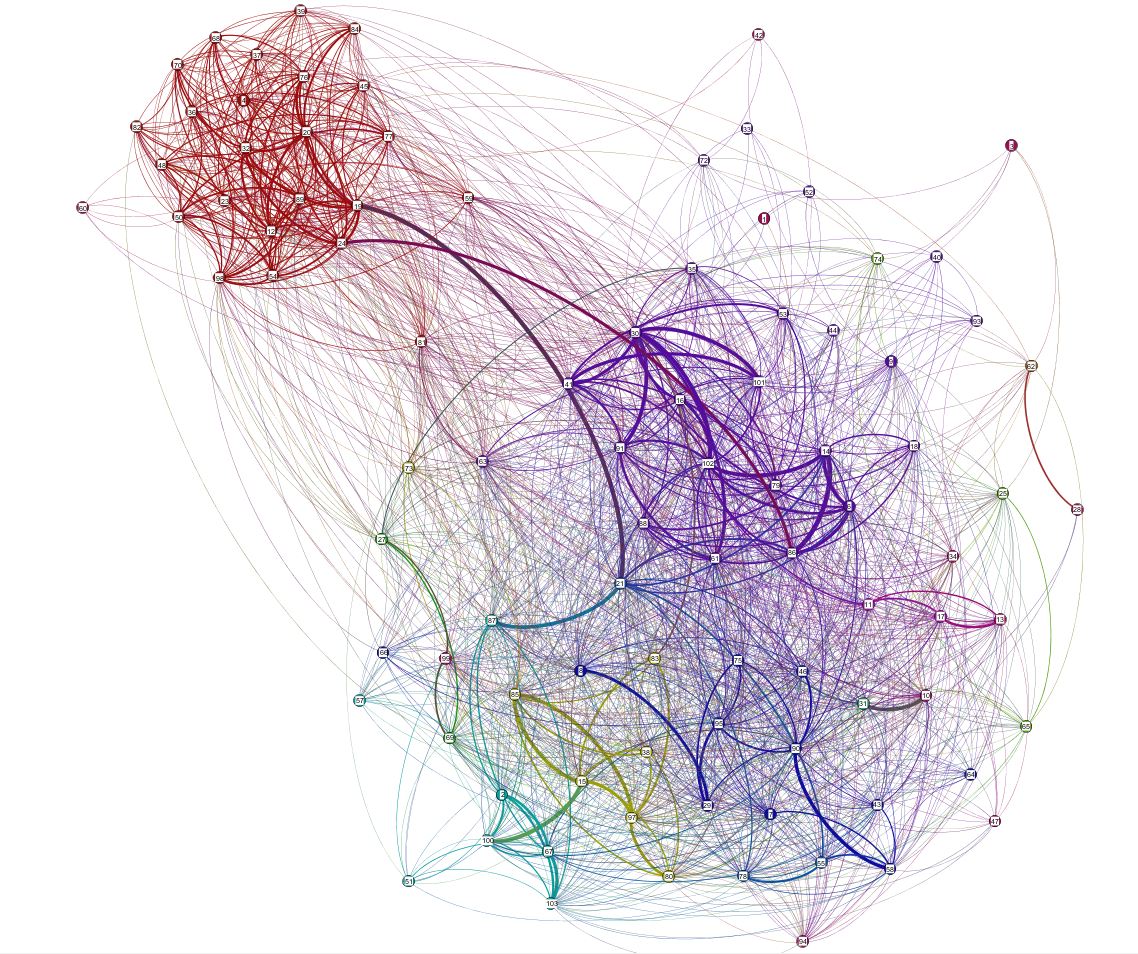

- Distribution of path lengths for GCEH-K3-E0.15-ST0.7-MAP0.5-Z0.2 using MIT-NOV dataset

Community Structure:

[expand title=”click view community structure”]

0(95)

|

- 1(95)

|

- 2(33)

|

- 3(30)

|

- 4(33)

|

- 5(31)

|

- 6(20)

| |

| - 8(7)

|

- 7(12)

|

- 9(15)

|

- 10(12)

| |

| - 11(5)

| |

| - 12(5)

|

- 13(4)

|

- 14(24)

|

- 15(15)

|

- 16(11)

|

- 17(49)

| |

| - 18(11)

|

- 19(41)

| |

| - 20(14)

|

- 21(4)

|

- 22(11)

|

- 23(5)

|

- 24(45)

[/expand]

Community Membership:

[expand title=”click to view”]

# options

# {'phi': 0.25, 'threads': 5, 'max_times_spoken_for': 1, 'minCliqueSize': 3, 'num_realizations': 100, 'epsilon': 0.14999999999999999, 'perturb_alpha': 0.20000000000000001, 'minCliqueEdgeWeight': 0.0, 'similarity_threshold': 0.69999999999999996, 'outfile': None, 'min_appearance_prop': 0.5, 'intNodeIDs': False, 'file': None, 'alpha': 1.0}

# ForceGlobalParent:true

# community_id-parent_id: members...n

0--1: 1 2 3 4 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 23 24 25 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 50 51 52 53 54 55 57 58 59 60 61 62 63 64 65 66 67 68 69 70 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 93 94 95 97 98 99 100 101 102 103

1-0: 1 2 3 4 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 23 24 25 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 50 51 52 53 54 55 57 58 59 60 61 62 63 64 65 66 67 68 69 70 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 93 94 95 97 98 99 100 101 102 103

2-1: 6 8 10 11 14 15 16 17 18 21 29 30 31 38 41 43 46 55 61 63 79 80 83 85 86 88 90 91 94 95 97 101 102

3-1: 6 10 11 14 15 16 17 18 21 30 31 38 41 43 55 61 69 79 80 83 85 86 88 90 91 94 95 97 101 102

4-1: 6 7 8 10 14 15 16 17 18 21 29 30 31 41 46 55 58 61 75 78 79 80 83 85 86 88 90 91 94 95 97 101 102

5-1: 6 10 11 13 14 15 16 17 18 27 30 31 35 41 46 53 61 63 69 78 79 83 85 86 88 91 95 97 99 101 102

6-1: 3 9 10 16 25 30 31 35 41 53 61 65 72 74 79 88 91 95 101 102

7-1: 6 10 14 18 25 31 62 65 83 86 88 102

8-6: 3 25 41 53 65 72 74

9-1: 6 14 16 30 41 44 53 61 73 79 83 91 95 101 102

10-1: 9 15 16 30 41 42 52 61 77 91 101 102

11-10: 9 30 42 77 101

12-10: 9 30 41 42 101

13-1: 1 30 83 101

14-1: 7 15 16 30 35 38 41 46 53 58 61 69 79 80 83 85 90 91 95 97 100 101 102 103

15-1: 10 16 30 41 53 61 73 74 79 83 91 95 99 101 102

16-1: 6 11 13 14 17 30 47 58 86 90 95

17-1: 1 2 3 7 8 10 15 21 25 27 29 31 34 38 42 43 46 47 51 52 55 57 58 61 64 65 66 67 69 72 73 74 75 78 79 80 83 85 87 88 90 93 94 95 97 98 99 100 103

18-17: 8 10 15 27 66 80 83 85 97 98 100

19-1: 1 2 6 7 8 14 15 18 21 29 33 34 38 40 43 46 47 51 55 57 58 64 66 67 72 73 75 78 80 83 85 86 87 88 90 94 95 97 100 102 103

20-19: 2 15 38 51 67 80 85 87 88 95 97 100 102 103

21-1: 28 58 90 103

22-1: 23 30 39 41 59 63 79 81 91 101 102

23-1: 45 69 72 88 99

24-1: 3 4 9 12 19 20 23 24 25 28 32 33 34 36 37 39 42 44 45 48 50 51 52 54 57 59 60 62 63 64 65 66 68 70 72 73 74 76 77 81 82 84 89 94 98

[/expand]

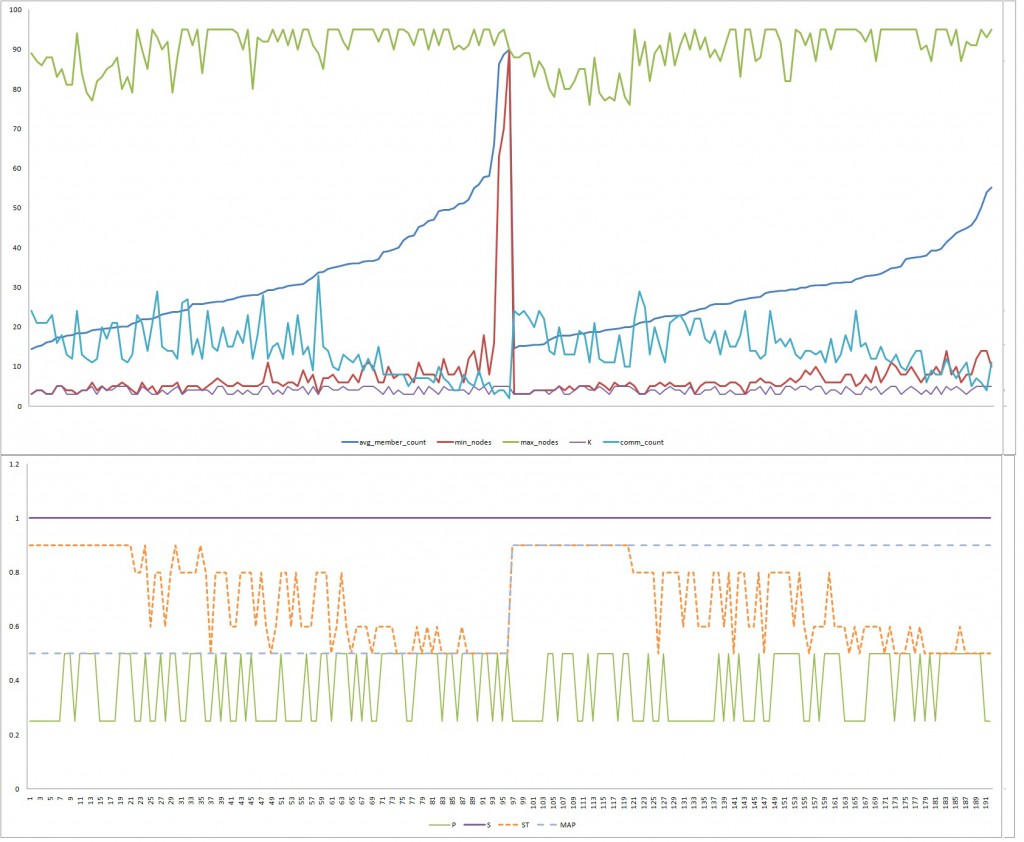

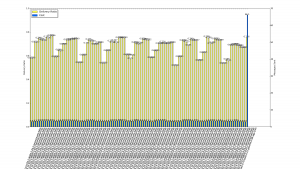

As expected there are a high number of messages that arrive within a small number of hops, which is highly desirable, however there is a long tail that extends up to 245 hops, which was not expected.

An annotated log file excerpt for message pair 85:3 (one of the longest paths) is shown below. Note that this message is live, which means it has not been delivered. Analysis of this shows a large number of hops between the same nodes, and the same communities.

Log file Exerpt

Key:

n-isBetter-n:Reason = the first (candidate) community is deemed

better than the last (test), for the reason given.

RANKBC-n = the rank of the two nodes in the given community n is

compared, and the encountered node scored higher

DM = destination met, so the message was passed

[expand title=”click to view log”]

Message ID: 8060

From 85 to 3

Status: live

Passed to node 85 at 2004-11-10 00:00:00 reason: INITIATED

Passed to node 16 at 2004-11-10 09:22:09 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 102 at 2004-11-10 09:56:10 reason: RANKBC-6

Passed to node 41 at 2004-11-10 12:04:45 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 6 at 2004-11-10 14:36:56 reason: RANKBC-8

Passed to node 90 at 2004-11-10 14:40:55 reason: 17-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 95 at 2004-11-10 15:23:10 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 30 at 2004-11-10 15:26:52 reason: RANKBC-6

Passed to node 102 at 2004-11-10 15:40:22 reason: RANKBC-6

Passed to node 41 at 2004-11-10 18:06:57 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 90 at 2004-11-10 18:19:24 reason: RANKBC-8

Passed to node 95 at 2004-11-11 09:47:52 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 30 at 2004-11-11 11:46:14 reason: RANKBC-6

Passed to node 102 at 2004-11-11 12:00:38 reason: RANKBC-6

Passed to node 41 at 2004-11-11 12:55:31 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-11 14:05:32 reason: RANKBC-8

Passed to node 41 at 2004-11-11 14:38:50 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-11 15:11:56 reason: RANKBC-8

Passed to node 41 at 2004-11-11 16:37:36 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-11 17:30:20 reason: RANKBC-8

Passed to node 41 at 2004-11-11 17:56:34 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 6 at 2004-11-11 18:18:04 reason: RANKBC-8

Passed to node 30 at 2004-11-15 09:20:19 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 101 at 2004-11-15 09:46:13 reason: RANKBC-6

Passed to node 41 at 2004-11-15 09:57:18 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 11 at 2004-11-15 12:47:19 reason: RANKBC-8

Passed to node 88 at 2004-11-15 13:41:22 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 38 at 2004-11-15 13:47:35 reason: RANKBC-6

Passed to node 79 at 2004-11-15 14:41:15 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 91 at 2004-11-15 14:52:48 reason: RANKBC-6

Passed to node 101 at 2004-11-15 14:58:03 reason: RANKBC-6

Passed to node 102 at 2004-11-15 14:58:48 reason: RANKBC-6

Passed to node 41 at 2004-11-15 15:26:31 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-15 16:04:25 reason: RANKBC-8

Passed to node 101 at 2004-11-15 16:08:44 reason: RANKBC-6

Passed to node 53 at 2004-11-15 16:14:16 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 13 at 2004-11-15 16:25:03 reason: RANKBC-8

Passed to node 91 at 2004-11-15 16:29:28 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 53 at 2004-11-15 16:30:40 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-15 16:41:37 reason: RANKBC-8

Passed to node 41 at 2004-11-15 16:51:04 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-15 17:31:42 reason: RANKBC-8

Passed to node 41 at 2004-11-15 18:13:21 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-16 09:18:35 reason: RANKBC-8

Passed to node 41 at 2004-11-16 09:24:56 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 6 at 2004-11-16 11:01:28 reason: RANKBC-8

Passed to node 30 at 2004-11-16 11:01:30 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 101 at 2004-11-16 11:58:26 reason: RANKBC-6

Passed to node 102 at 2004-11-16 12:09:36 reason: RANKBC-6

Passed to node 41 at 2004-11-16 12:15:36 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 6 at 2004-11-16 13:35:34 reason: RANKBC-8

Passed to node 102 at 2004-11-16 14:12:41 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 41 at 2004-11-16 14:47:50 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-16 14:49:36 reason: RANKBC-8

Passed to node 41 at 2004-11-16 14:49:53 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-16 15:06:52 reason: RANKBC-8

Passed to node 101 at 2004-11-16 15:13:25 reason: RANKBC-6

Passed to node 53 at 2004-11-16 15:41:12 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 13 at 2004-11-16 15:50:43 reason: RANKBC-8

Passed to node 102 at 2004-11-16 15:55:57 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 53 at 2004-11-16 16:44:56 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 14 at 2004-11-16 16:46:28 reason: RANKBC-8

Passed to node 79 at 2004-11-16 16:52:06 reason: 6-isBetter-1:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 61 at 2004-11-16 16:53:08 reason: RANKBC-6

Passed to node 58 at 2004-11-16 17:20:54 reason: RANKBC-6

Passed to node 31 at 2004-11-16 17:21:37 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 90 at 2004-11-16 18:52:52 reason: RANKBC-6

Passed to node 101 at 2004-11-17 12:32:06 reason: 6-isBetter-17:BETTER-CANDIDATE-LESS-MEMBERS

Passed to node 41 at 2004-11-17 12:37:00 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 30 at 2004-11-17 12:51:31 reason: RANKBC-8

Passed to node 102 at 2004-11-17 12:53:32 reason: RANKBC-6

Passed to node 41 at 2004-11-17 12:59:28 reason: 8-isBetter-6:BETTER-CANDIDATE-HAS-HIGHER-LEVEL-DEPTH

Passed to node 17 at 2004-11-17 13:10:13 reason: RANKBC-8

... etc.

[/expand]

In the implementation, the way that the ‘best’ bridging community is found, involves a choice when there are two or more candidates for the best BC for a node pair. This choice is naive because the choice is made by picking the last one in the list.

There seems to be a number of problems when considering whether a given best bridging community for one node is better than another. When considering this choice, the depth of the community in the structure is compared, this is also a naive decision, as the hierarchy levels in the structure do not relate to the importance of the community, as the depth is not governed by any metric saying how deep a community is, only that it has a parent, and perhaps some children. However, the size of the communities is still perhaps a good metric to use.

There will always be a bridging community for every node pair, as there is one global parent community, this means the the global community is treated like a local community. This should not be a big problem, as it pushes messages to the top when there is no better community to be found, these messages should soon find a node with a better community than the global one.

A couple of solutions to this are:

- compare all best communities for a node/message when comparing two nodes

- store a best-so-far community with the message, and only pass it on to nodes with a better one.

- stop using any local/global ranking, and consider only community best-ness

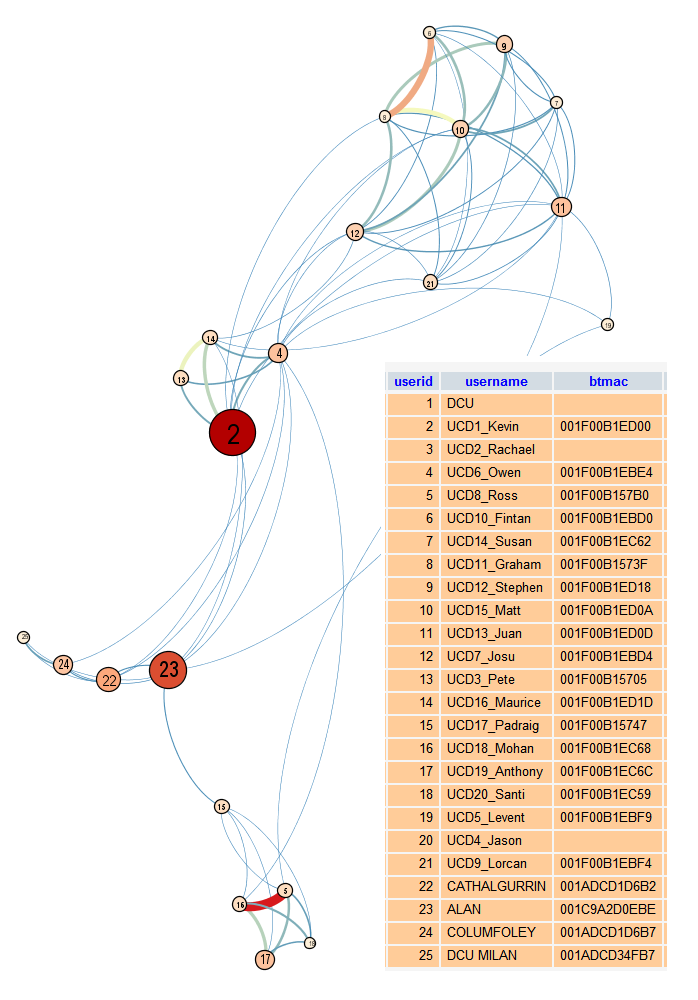

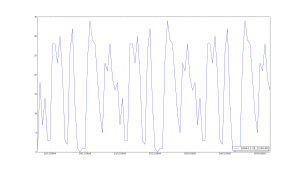

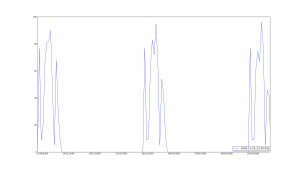

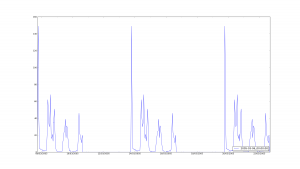

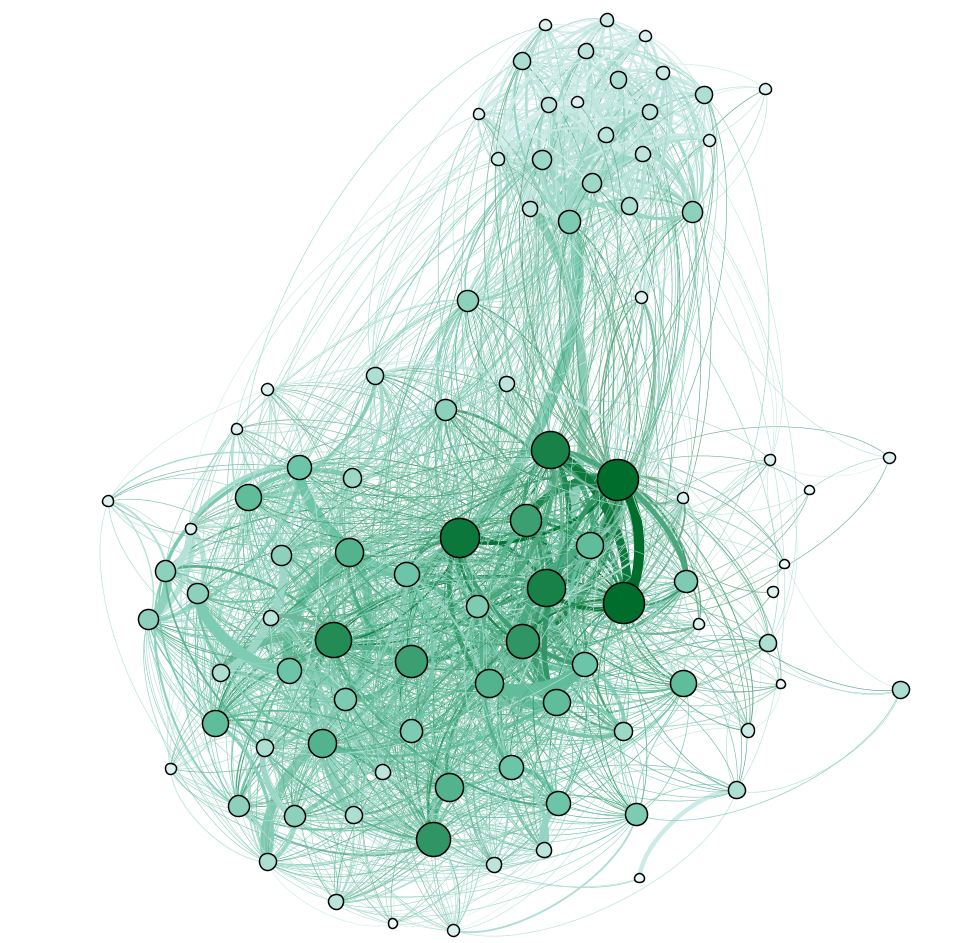

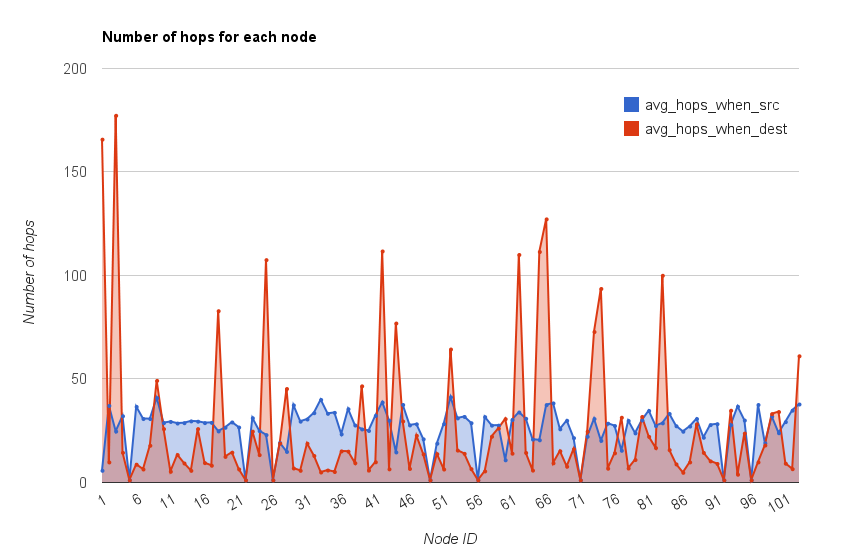

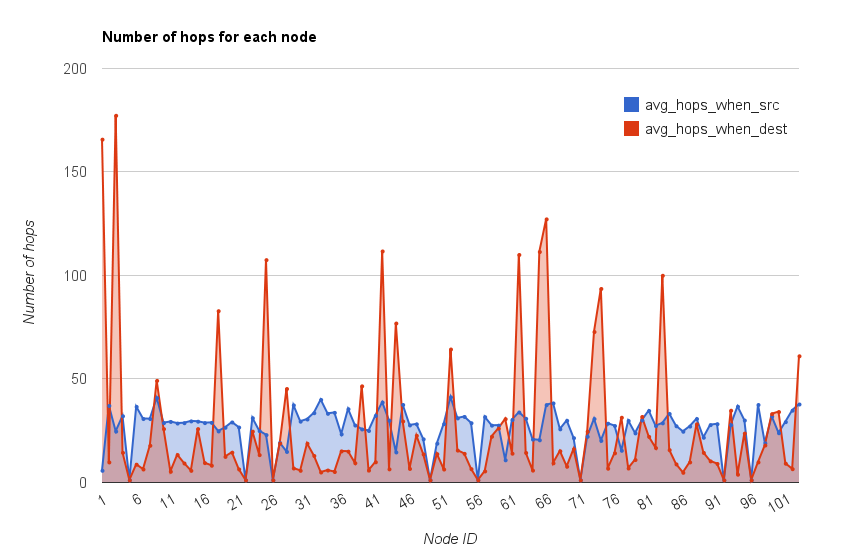

Pádraig suggested checking to see whether it is particular destination nodes causing the high hop counts, the graphic below illustrates the average hop lengths for each node, both when the node is the source and when the node is the destination. The data table is also given, which also includes the standard deviation.

Average hop lengths by node where the node is the source and where the node is the destination

Data table:

[expand title=”click to view data”]

| node |

avg_hops_when_src |

stddev |

avg_hops_when_dest |

stddev |

| 1 |

5.65 |

3.06 |

165.62 |

56.67 |

| 2 |

36.99 |

48.48 |

9.68 |

12.99 |

| 3 |

24.58 |

35.35 |

177.15 |

90.16 |

| 4 |

31.98 |

40.29 |

14.3 |

18.24 |

| 5 |

1 |

0 |

1 |

0 |

| 6 |

36.5 |

48.97 |

8.59 |

6.47 |

| 7 |

30.65 |

50 |

6.27 |

10.11 |

| 8 |

30.64 |

51.51 |

17.74 |

13.27 |

| 9 |

40.73 |

49.72 |

49.09 |

34.73 |

| 10 |

28.68 |

50.77 |

25.77 |

21.38 |

| 11 |

29.25 |

51.39 |

5.19 |

3.42 |

| 12 |

28.47 |

37.03 |

13.25 |

17.61 |

| 13 |

28.65 |

48.69 |

9.17 |

9.79 |

| 14 |

29.51 |

48.44 |

5.63 |

4.58 |

| 15 |

29.37 |

49.2 |

25.79 |

23.91 |

| 16 |

28.68 |

50.36 |

9.35 |

7.35 |

| 17 |

28.81 |

50.53 |

8.09 |

10.76 |

| 18 |

24.65 |

35.73 |

82.75 |

35.73 |

| 19 |

26.43 |

49.7 |

12.36 |

16.71 |

| 20 |

28.99 |

47.09 |

14.4 |

17.78 |

| 21 |

26.54 |

49.97 |

6.3 |

9.82 |

| 22 |

1 |

0 |

1 |

0 |

| 23 |

31.04 |

46.51 |

24.57 |

30.6 |

| 24 |

24.81 |

44.58 |

13.21 |

17.19 |

| 25 |

22.88 |

40.02 |

107.42 |

63.14 |

| 26 |

1 |

0 |

1 |

0 |

| 27 |

19.05 |

33.76 |

18.77 |

12.32 |

| 28 |

14.76 |

31.24 |

45.16 |

26.61 |

| 29 |

37.24 |

46.37 |

6.75 |

9.76 |

| 30 |

29.38 |

49.53 |

5.64 |

3.51 |

| 31 |

30.43 |

49.31 |

18.72 |

10.58 |

| 32 |

33.48 |

46 |

12.8 |

17.24 |

| 33 |

39.87 |

50.71 |

4.82 |

3.3 |

| 34 |

33.17 |

40.86 |

5.78 |

3.49 |

| 35 |

33.63 |

45.75 |

5.06 |

2.24 |

| 36 |

23.25 |

40.12 |

15.01 |

18.54 |

| 37 |

35.37 |

45.88 |

14.89 |

18.24 |

| 38 |

27.52 |

43.52 |

9.3 |

9.72 |

| 39 |

25.62 |

39.24 |

46.45 |

29.91 |

| 40 |

24.88 |

36.34 |

5.75 |

3.27 |

| 41 |

32.17 |

52.09 |

9.75 |

5.69 |

| 42 |

38.6 |

48.48 |

111.61 |

61.11 |

| 43 |

29.83 |

51.16 |

6.34 |

9.82 |

| 44 |

14.51 |

16.61 |

76.79 |

37.2 |

| 45 |

37.45 |

43.78 |

29.45 |

24.56 |

| 46 |

27.56 |

48.06 |

6.54 |

9.87 |

| 47 |

28.09 |

43.68 |

22.54 |

19.48 |

| 48 |

20.84 |

33.7 |

13.55 |

17.73 |

| 49 |

1 |

0 |

1 |

0 |

| 50 |

18.75 |

34.44 |

13.67 |

17.81 |

| 51 |

28.18 |

50.56 |

6.25 |

4.93 |

| 52 |

41.13 |

49.35 |

64.24 |

34.58 |

| 53 |

30.95 |

47.38 |

15.41 |

13.87 |

| 54 |

31.66 |

46.03 |

13.83 |

17.66 |

| 55 |

28.63 |

44.23 |

6.44 |

9.57 |

| 56 |

1 |

0 |

1 |

0 |

| 57 |

31.55 |

41.85 |

5.34 |

3.2 |

| 58 |

27.45 |

50.7 |

22.09 |

22.22 |

| 59 |

27.46 |

44.1 |

26.02 |

27.82 |

| 60 |

10.72 |

9.25 |

30.58 |

26.47 |

| 61 |

30.2 |

49.97 |

13.91 |

18.41 |

| 62 |

33.79 |

44.06 |

109.91 |

57.24 |

| 63 |

30.76 |

48.56 |

14.25 |

16.67 |

| 64 |

20.71 |

22.38 |

5.73 |

3.49 |

| 65 |

20.37 |

34.53 |

111.34 |

63.53 |

| 66 |

37.36 |

45.57 |

127.11 |

65.15 |

| 67 |

38.19 |

48.7 |

9.25 |

13.14 |

| 68 |

25.67 |

37.47 |

15.05 |

18.31 |

| 69 |

29.79 |

45.81 |

7.61 |

4.27 |

| 70 |

21.41 |

33.17 |

16.02 |

18.61 |

| 71 |

1 |

0 |

1 |

0 |

| 72 |

22.07 |

27.05 |

24.52 |

20.85 |

| 73 |

30.64 |

49.46 |

72.68 |

66.96 |

| 74 |

20.09 |

30.47 |

93.49 |

55.14 |

| 75 |

28.4 |

50.19 |

6.65 |

9.8 |

| 76 |

27.27 |

37.04 |

14.08 |

17.77 |

| 77 |

15.32 |

29.31 |

31.28 |

38.38 |

| 78 |

29.72 |

47.43 |

6.76 |

9.76 |

| 79 |

23.72 |

42.65 |

10.85 |

9.49 |

| 80 |

29.97 |

52.24 |

31.66 |

25.39 |

| 81 |

34.55 |

49.01 |

22.03 |

26.42 |

| 82 |

27.24 |

40.62 |

16.61 |

18.63 |

| 83 |

28.57 |

32.77 |

99.87 |

50.57 |

| 84 |

33.09 |

45.61 |

15.61 |

18.58 |

| 85 |

27.16 |

48.18 |

8.73 |

8 |

| 86 |

24.51 |

44.49 |

4.67 |

3.37 |

| 87 |

27 |

50.14 |

9.69 |

13.32 |

| 88 |

30.65 |

52.13 |

28.11 |

44.69 |

| 89 |

21.62 |

35.71 |

14.28 |

17.76 |

| 90 |

27.7 |

48 |

10.19 |

9.92 |

| 91 |

28.15 |

47.49 |

8.95 |

8.27 |

| 92 |

1 |

0 |

1 |

0 |

| 93 |

27.7 |

37.74 |

34.67 |

35.09 |

| 94 |

36.59 |

47.23 |

3.75 |

1.49 |

| 95 |

29.91 |

49.99 |

23.63 |

20.39 |

| 96 |

1 |

0 |

1 |

0 |

| 97 |

37.44 |

49.69 |

9.7 |

6.36 |

| 98 |

18.9 |

35.19 |

17.85 |

19.89 |

| 99 |

31.82 |

49.52 |

32.99 |

20.77 |

| 100 |

23.79 |

39.27 |

34.01 |

17.46 |

| 101 |

29.15 |

50.93 |

9.07 |

8.7 |

| 102 |

34.7 |

47.82 |

6.42 |

5.27 |

| 103 |

37.67 |

48.72 |

60.97 |

33.82 |

[/expand]

It seems that in some cases, a node might be very hard to get to, and therefore has a high number of hops, however it could also be the case that because the node is not seen very often, then the messages just keep moving around different nodes. The way to see this would be to take measurements of the the hop count (as above) at time intervals. However, it would be best to first fix the algorithm to see if the behaviour continues, then consider further analysis.

Problem Found/Solution

After a bit of bug tracking and debug output scouring, we were able to find two problems. The first, a simulator problem meant that community file (numbered 0 to n) were being loaded in an incorrect order, which meant the internal numbering of communities did not match the file names:

[expand title=”view ordering”]

community.0.dat

community.1.dat

community.10.dat

community.11.dat

community.12.dat

community.13.dat

community.14.dat

community.15.dat

community.16.dat

community.17.dat

community.18.dat

community.19.dat

community.2.dat

community.20.dat

community.21.dat

community.22.dat

community.23.dat

community.24.dat

community.3.dat

community.4.dat

community.5.dat

community.6.dat

community.7.dat

community.8.dat

community.9.dat

[/expand]

The second problem was a little less subtle, it was caused by the behaviour of the isBetter function when considering the level of a community in the hierarchy and the number of members in a community. When an upper level community had a smaller membership than a lower level one, the message tended to be flip-flop’d between communities. This was temporarily fixed by only considering the size of a community when the level was the same.

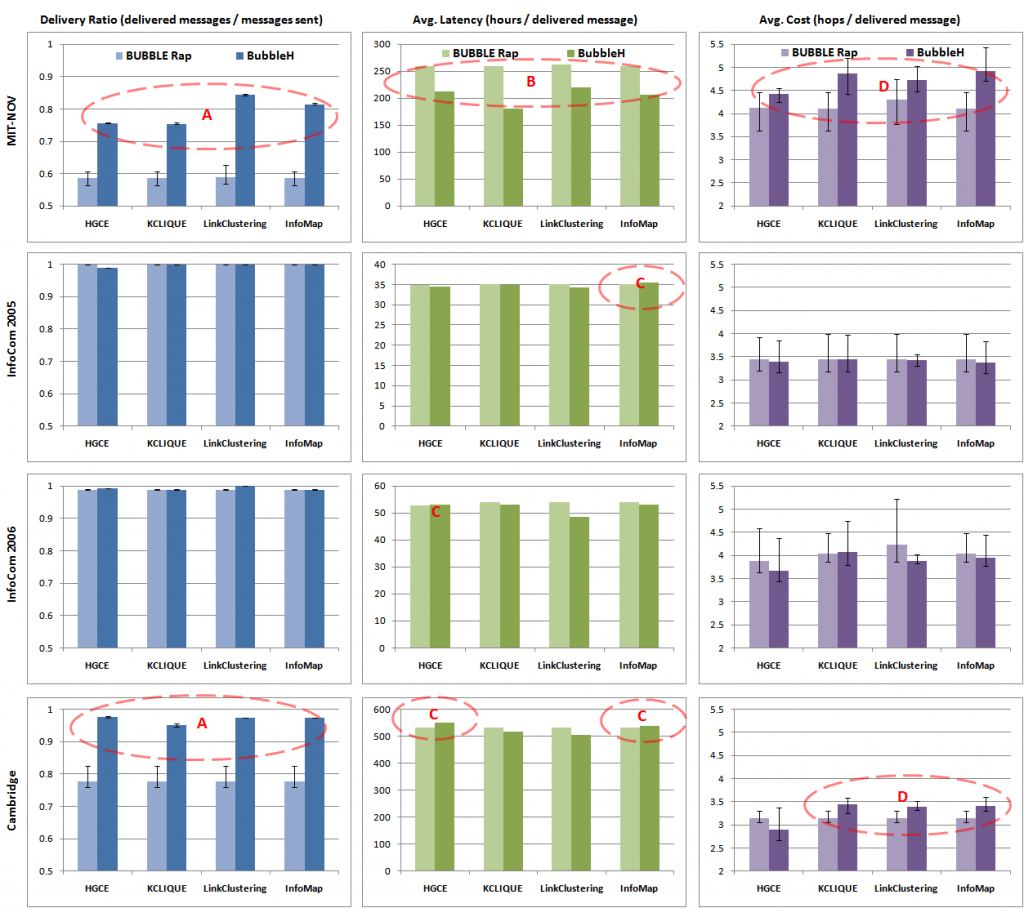

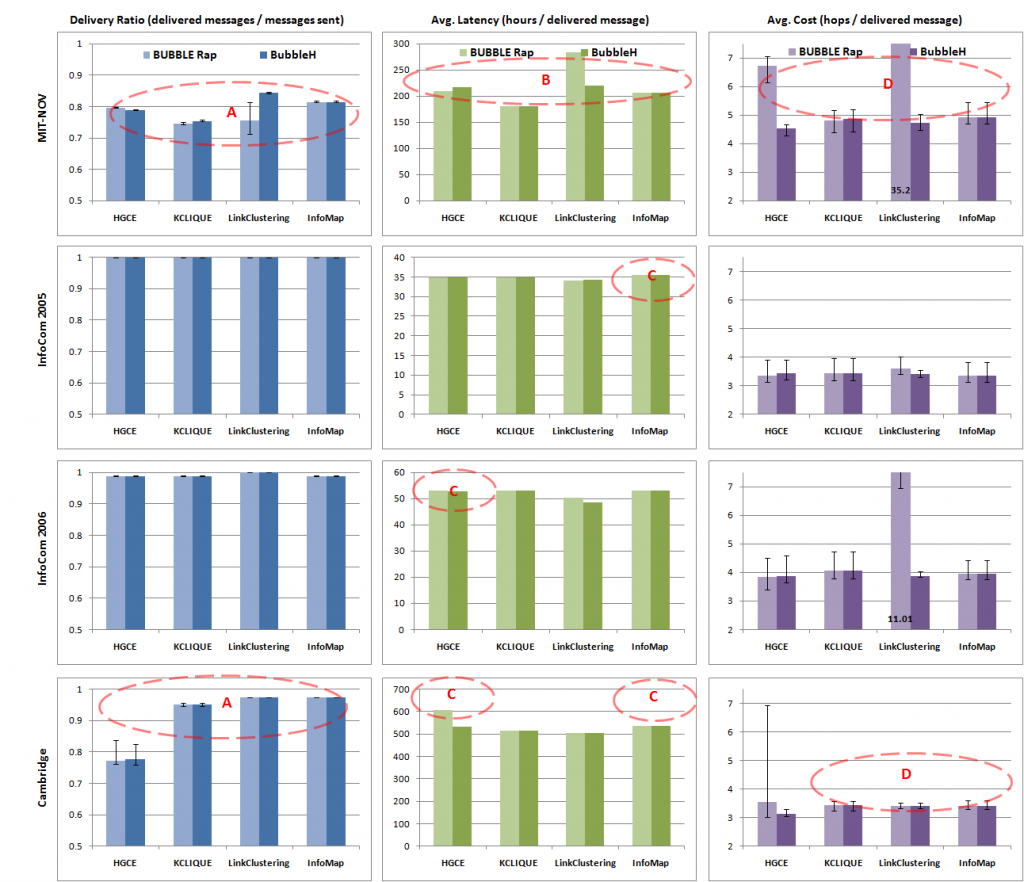

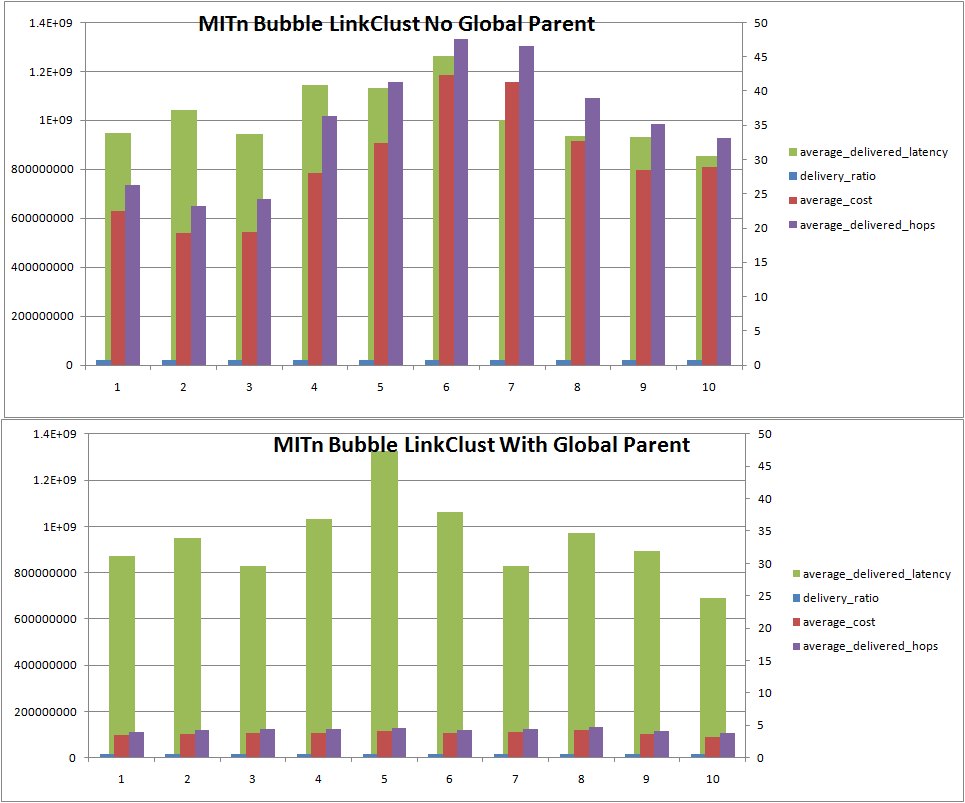

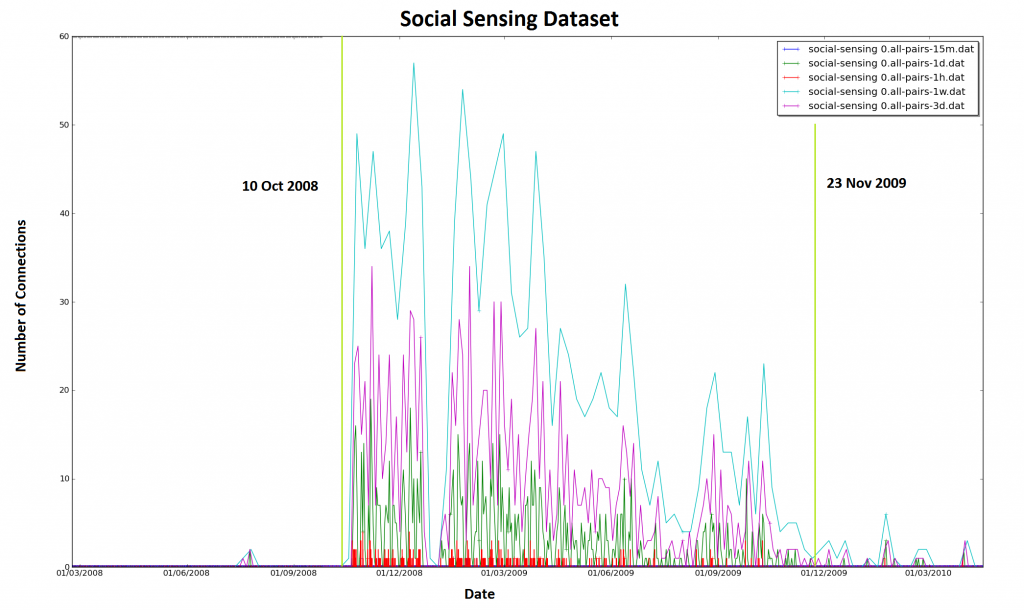

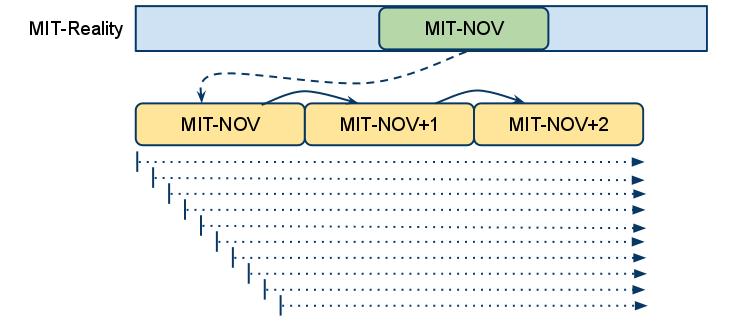

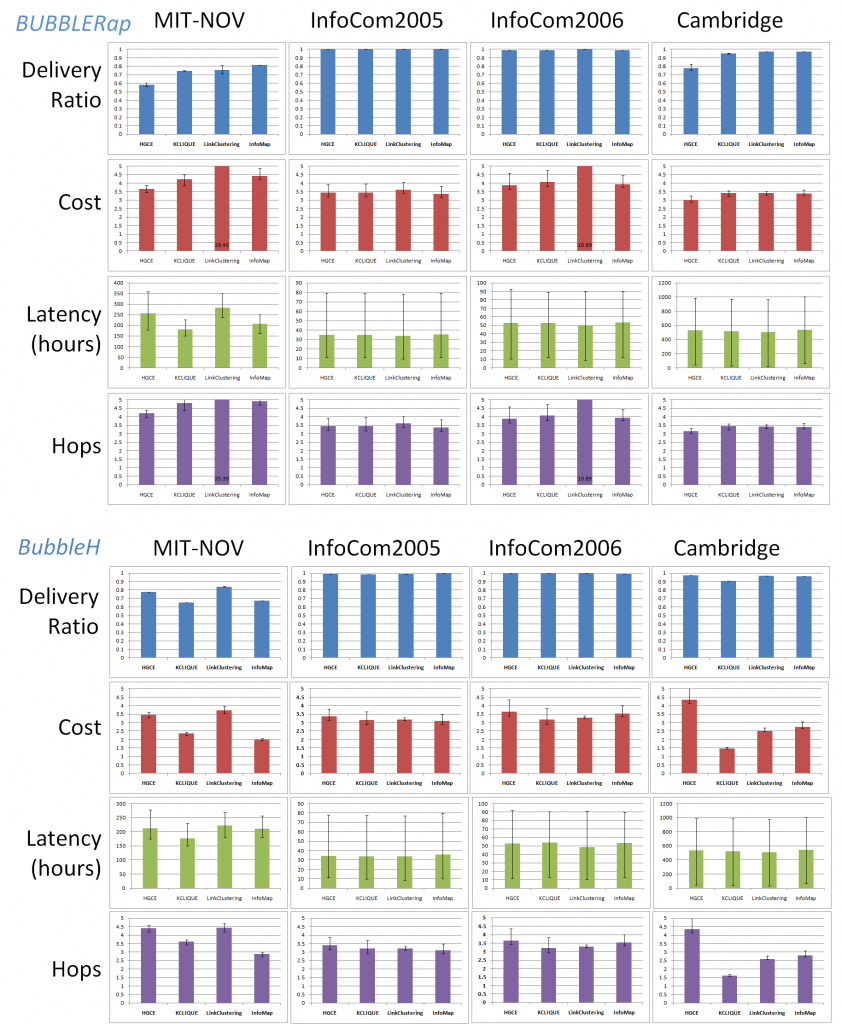

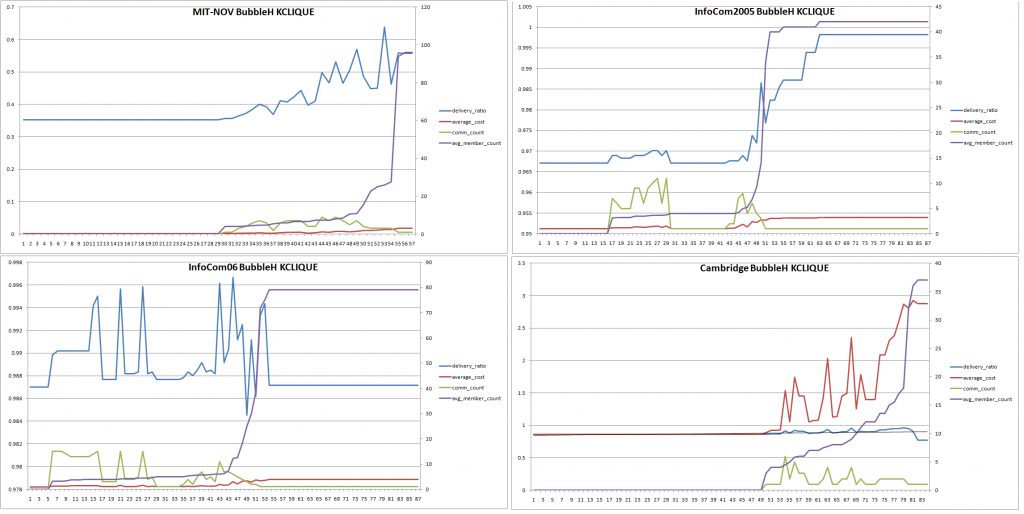

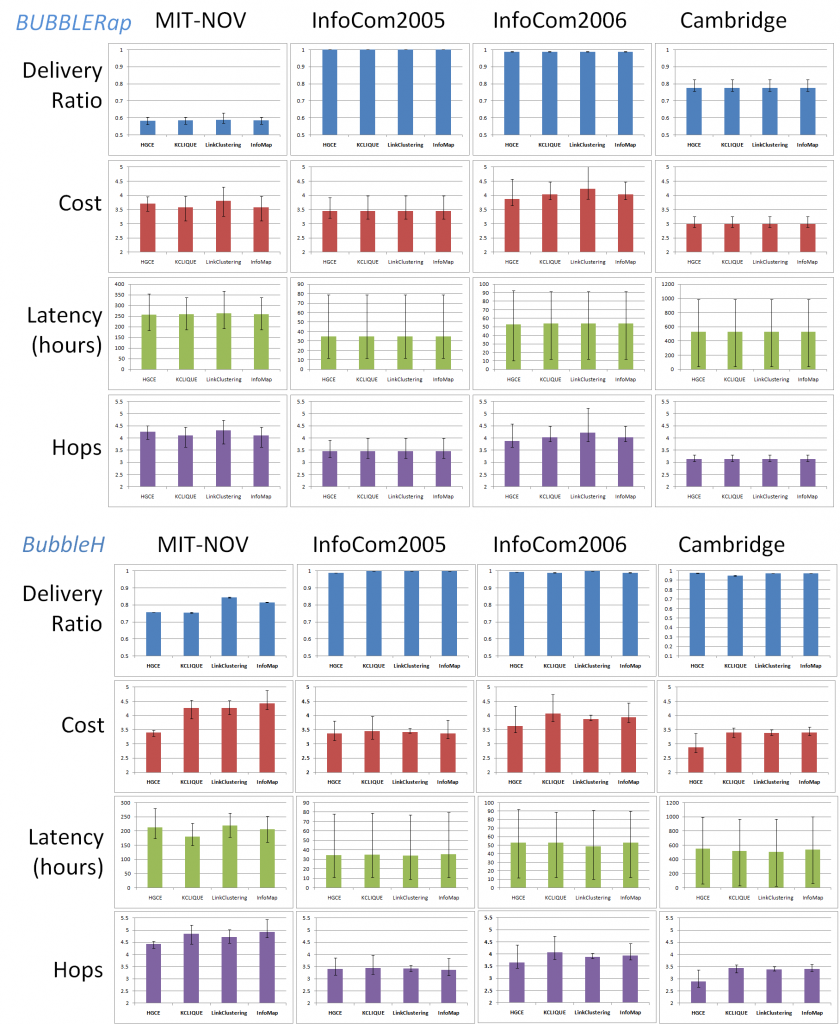

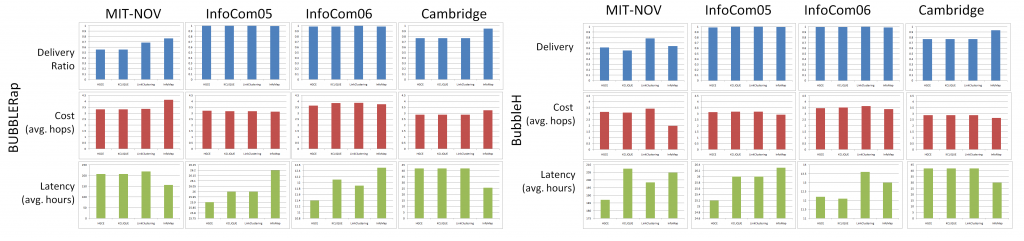

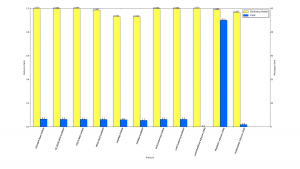

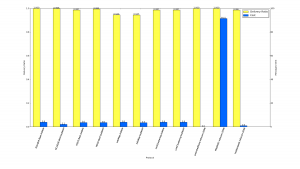

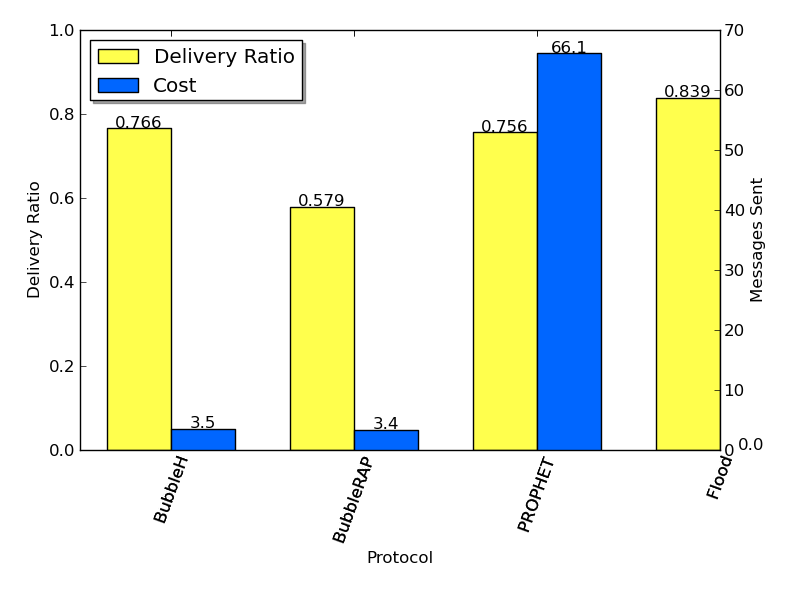

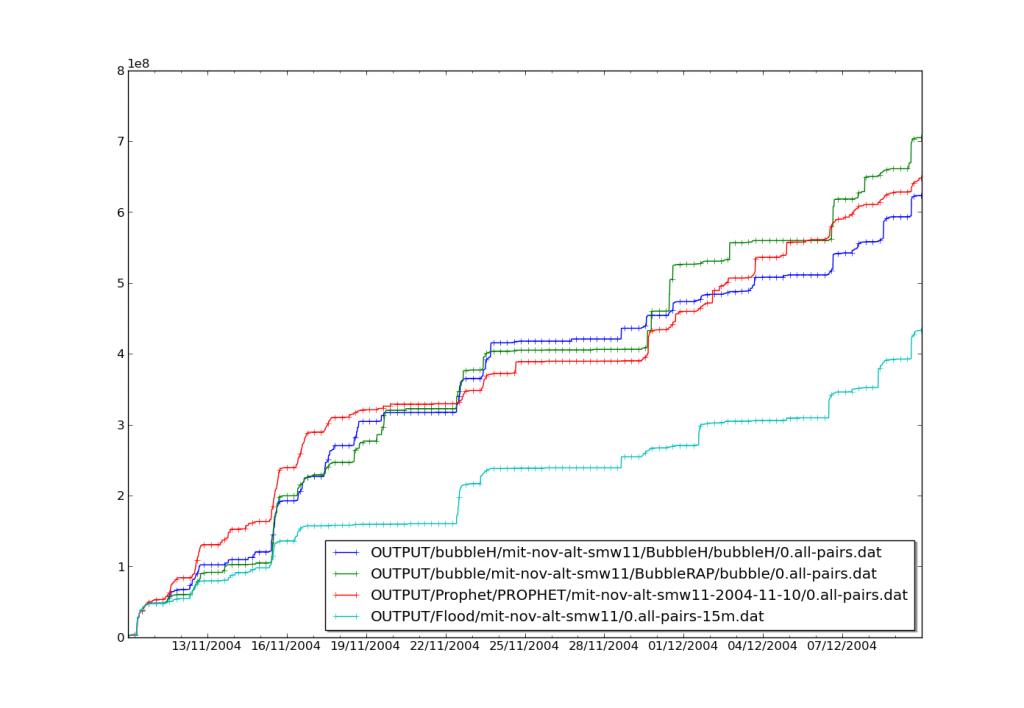

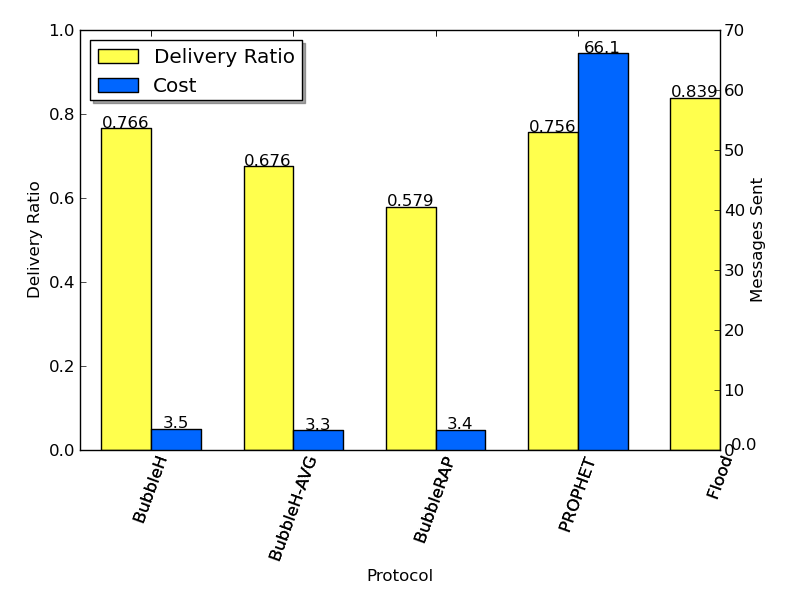

Results after this fix (for both BubbleRAP and BubbleH) are shown below:

However!

We have since decided to test what happens when we ignore the depth of a community, and only consider the member count, this might have the effect of flattening the hierarchy somewhat, but it simplifies the algorithm… watch this space for results.